- Blog

- 03.16.2020

- Data Fundamentals

Moving Google Analytics 360 Data into Snowflake

If you are a Google Analytics 360 (GA 360) user, you can definitely enjoy the conveniences of having everything under the Google umbrella, including Google Big Query. With GA360, you can export sessions and bring data from a Google Analytics 360 account into Google BigQuery, and then use a SQL-like syntax to query all of that analytics data.

But often, you need to get that GA 360 data into a different cloud data warehouse (CDW) if you have a different primary cloud data warehouse, or if you need that analytics data for an application running in another CDW. If that CDW is Snowflake, Matillion ETL has a way to make that happen.

Getting Google Analytics 360 data from Google BigQuery into Snowflake

Matillion ETL has an integration for Google BigQuery that allows users to query data from Google BigQuery and load it into Snowflake. When transferring large amounts of data, there is an alternative workflow that leverages Snowflake’s ability to load data directly from files in Google Cloud Storage (GCS) buckets.

Here’s how you can export GA360 data from Google BigQuery and load it into Snowflake using Matillion ETL for Snowflake on a daily basis.

How it’s done

- Export GA360 data from Google BigQuery to files in a GCS bucket

- Load these files into tables in Snowflake

- Use Matillion ETL for Snowflake to orchestrate and schedule these steps to bring in new data every day

Let’s walk through the steps. In this example, I use the bq utility from Google-Cloud-SDK to export tables in Google BigQuery to files in GCS. There are other ways to accomplish this, but this method keeps it simple.

Getting started: What you’ll need

Before you begin, you’ll need to make sure that you have the following things:

Resources in GCP

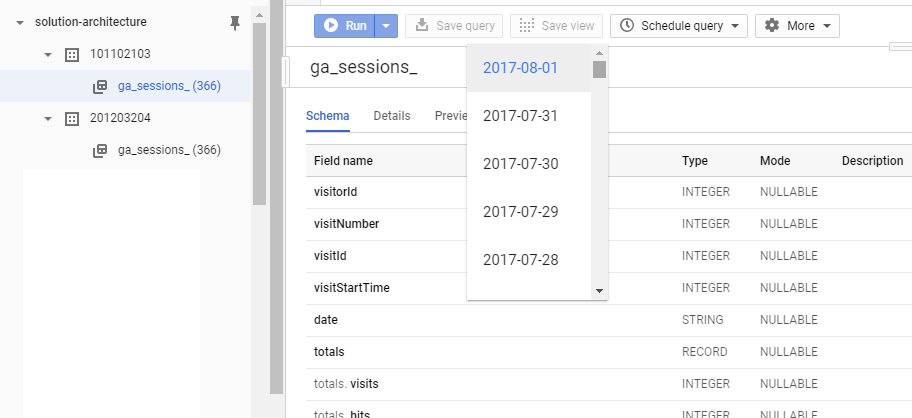

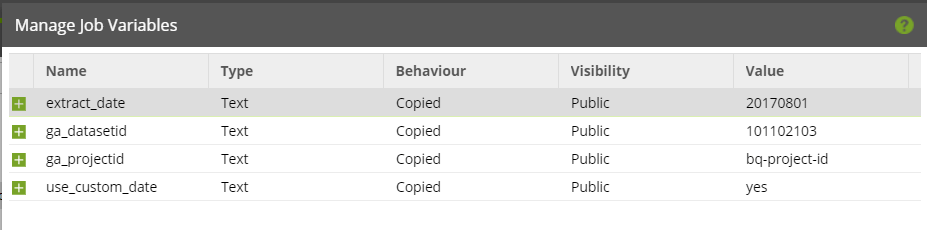

- Google BigQuery datasets holding your Analytics data from GA360 (here’s how to set that up). Once you create the datasets, they will appear in your BigQuery project, one dataset per Google-Analytics-View you choose to export. The dataset name is the view-id of the property and data for each day is available as a table by name ga_sessions_YYYYMMDD. Here’s an example based on data copied from “Google Analytics sample dataset for BigQuery” (link)

- A GCP bucket to hold files for data exported from Google BigQuery

- Sufficient rights in GCP to create a service account with access to both BigQuery and your cloud storage bucket in GCP

A Snowflake account hosted on AWS or Azure, or GCP

If it’s Snowflake on GCP, the process is much easier. But let’s use AWS here to cover a wider use case.

A Snowflake user with ACCOUNTADMIN privileges

You need this to create a Storage Integration to load data from a GCS bucket (see next section).

Matillion ETL for Snowflake, on your chosen cloud platform

In this case, AWS. You want Matillion ETL to be hosted in the same region and cloud provider as your Snowflake account.

Snowflake “Storage Integration”

Before we get started, let’s talk about Snowflake “Storage Integration” a feature that is key to this workflow.

Snowflake defines a “Storage Integration” as:

“…a Snowflake object that stores a generated identity and access management (IAM) entity for your external cloud storage, along with an optional set of allowed or blocked storage locations (Amazon S3, Google Cloud Storage, or Microsoft Azure). Cloud provider administrators in your organization grant permissions on the storage locations to the generated entity. This option allows users to avoid supplying credentials when creating stages or when loading or unloading data.”

Additional Configuration

Let’s create and configure a few other assets we need for this workflow.

- Create a GCS bucket in the same region as your BigQuery Project. I’ll be using mtln_ga360_rawdata for this example.

- Create a GCS Storage Integration in Snowflake (link). This step involves creating a GCS Storage Integration, a service account in GCP and assigning privileges to the service account to access GCS bucket above the Google BigQuery project.

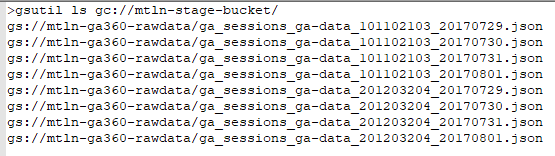

- Install and configure Google Cloud SDK on the Matillion server. We will be using bq extract to extract and gsutil to (optionally) move/archive processed files.

The Matillion ETL Job

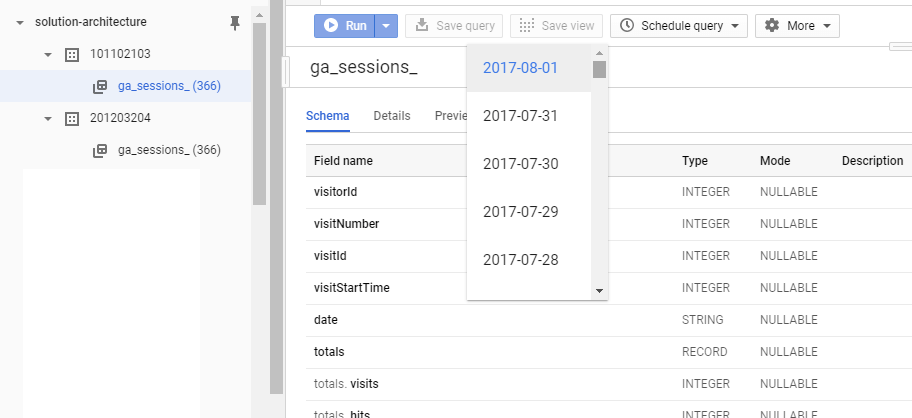

Here’s a Matillion ETL job to help with this use case:

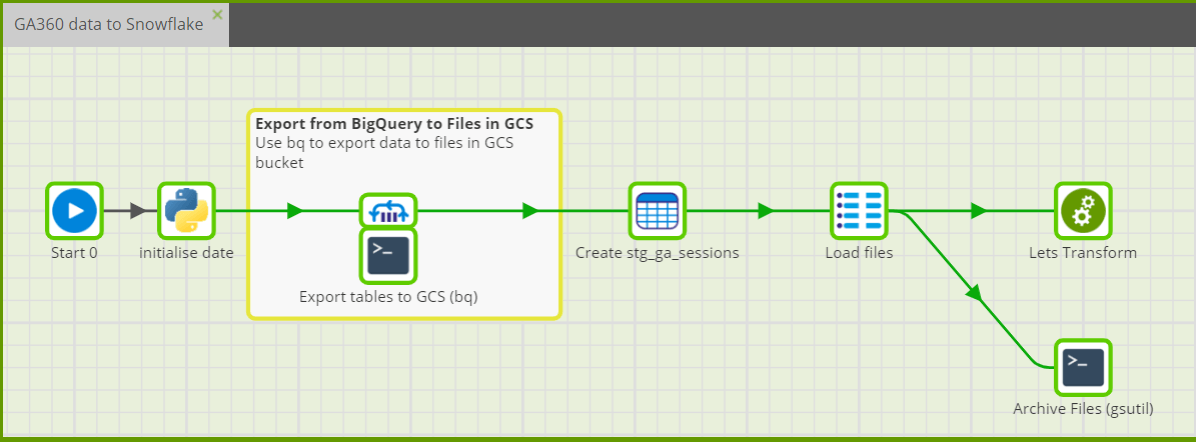

The job uses a set of variables for date-of-extract, project-id, dataset-id.

The BASH component uses a bq extract command to export/dump contents of a table to GCS as JSON files. This preserves the hierarchy in GA-data. The Grid-Iterator loops over a set of view-ids (representing properties in Google Analytics) as ga_datasetid to the script to parameterize the command.

| bq extract \ --project_id=${ga_projectid} \ --destination_format=NEWLINE_DELIMITED_JSON \ ${ga_datasetid}.ga_sessions_${extract_date} \ gs://mtln-ga360-rawdata/ga_sessions_${ga_projectid}_${ga_datasetid}_${extract_date}.json |

The BASH component is run for a set of properties controlled by the ITERATOR attached to it. This creates multiple files in the GCS bucket – one per property per day.

Next, we use the SQL SCRIPT orchestration component and issue a COPY command to load files from GCS into Snowflake. We specify the location using GCS-integration GCS_STAGE_GA360_RAWDATA.

Note that while our Snowflake account is in AWS, it is loading files in GCS bucket(s) into your database. This is possible because of the permissions we granted to the service-account configured against your GCS Storage Integration. Without the storage integration, we’d have to copy these files across from GCS buckets into an S3 bucket and then ingest them into Snowflake.

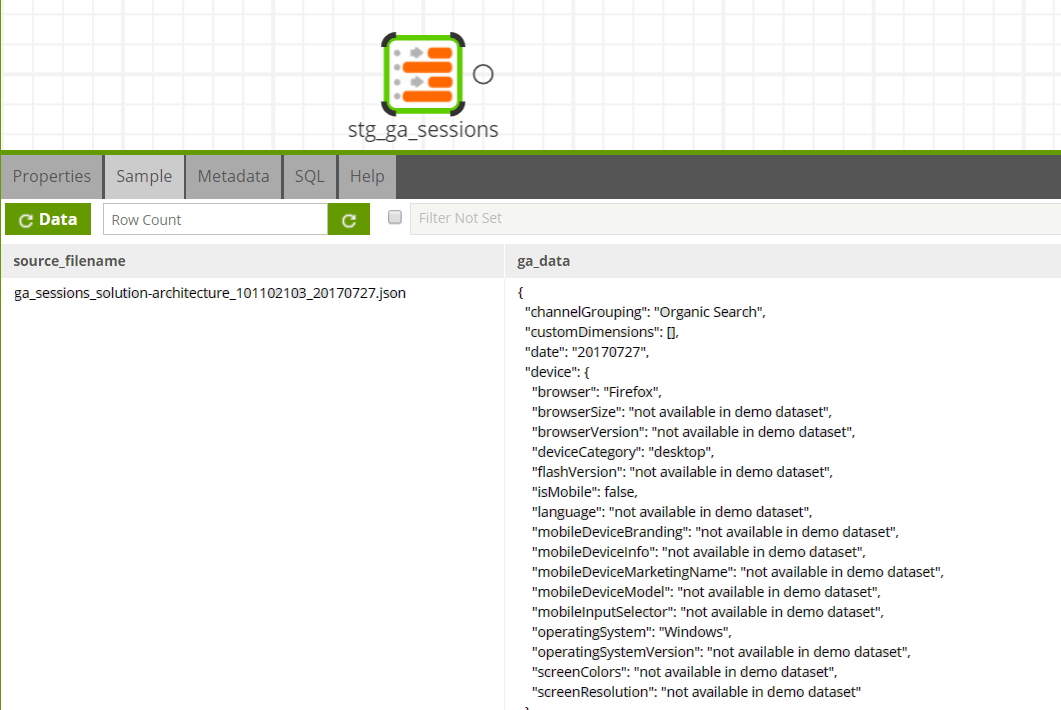

Also, note the use of metadata$filename to record the name of the file along with data. This is handy if you are loading files in bulk. Each row will have the name of the file from which it’s loaded, as indicated in the image below.

COPY INTO GA_RAWDATA.STAGE.STG_GA_SESSIONS_${extract_date} FROM (SELECT metadata$filename , parse_json($1) as ga_data FROM @GCS_STAGE_GA360_RAWDATA ) PATTERN='.*.json' FILE_FORMAT= ( TYPE=JSON);

Here’s what it looks like once loaded into Snowflake:

Next, we may want to transform that data. Check out our additional resources on Flatten-variant or Extract-Nested-Data to learn more about how you can transform your data to gain insights and create value for your business.

Featured Resources

What Are Feature Flags?

Feature flags are a software development tool that has the capability to control the visibility of any particular feature. ...

BlogHow Your Data Teams Can Do More With Marketing Analytics

Improve your marketing analytics with Matillion Data Productivity Cloud that enables businesses to centralize and integrate ...

BlogThe Importance of Data Classification in Cloud Security

Data classification enables the targeted protection and management of sensitive information. Personally Identifiable ...

Share: