- Blog

- 04.01.2021

- Data Fundamentals

Reading Delta Lake Table Data from Matillion ETL

As more data is being stored within operational data lakes, leveraging this valuable resource is becoming vital to enterprises on all stages of their data journey. Being able to streamline data ingestion and enrichment with automated transformation workflows opens up exciting business opportunities. In this blog we’ll look at the new possibilities available to data engineers using Matillion ETL and Delta Lake.

The arrival of Delta Lake provides an alternative to the traditional approach of loading data into the Cloud Data Warehouse or making use of the external table feature available in many systems.

The connection to the Delta Lake table using the Database Query component in Matillion ETL for Delta Lake on Databricks opens up easy access to the data stored in the data lake directly through Matillion, using SQL. All you need to do is make a few configuration changes on the server running the Matillion instance. Let’s take a look at how you do that.

Install the latest Simba JDBC Driver

First, we need to ensure that we have the latest version of the Simba jdbc driver available for use on the server.

Assuming you have the driver software available, all is well. If you don’t , the driver can be downloaded from https://pages.databricks.com/ODBC-Driver-Download.html.

The driver software should be located in /usr/share/emerald/WEB-INF/lib. At the time of writing the latest available version was the 2.6.16 release (file spark-jdbc-2.6.16.1020.jar).

Configure the Data Source

Once you have an available jdbc driver, you can configure the Matillion ETL instance to allow access to the Delta Lake platform as a data source.

The data sources available within Matillion are controlled through the jdbc-providers.properties configuration file, which can be found in /usr/share/emerald/WEB-INF/classes. Navigate to this directory and open the file with your preferred editor.

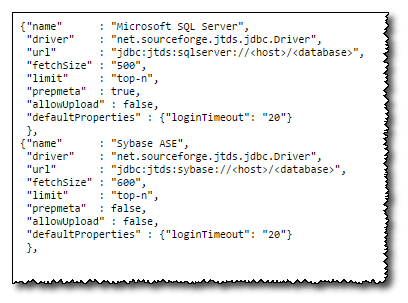

In the editor, you will see a JSON file with the repeating elements—a set for each driver will be present.

In order to make Delta Lake available to the Matillion instance, the following information should be included within the configuration file.

,

{"name" : "Delta Lake",

"driver" : "com.simba.spark.jdbc.Driver",

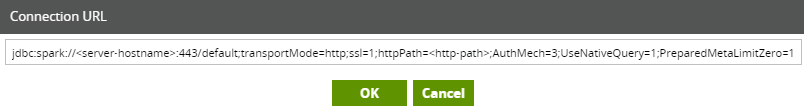

"url" : "jdbc:spark://<server-hostname>:443/default;transportMode=http;ssl=1;httpPath=<http-path>;AuthMech=3;

UseNativeQuery=1;PreparedMetaLimitZero=1",

"fetchSize" : "500",

"limit" : "limit-outer",

"allowUpload":false}Note the comma prior to the first bracket – this should follow the closing bracket of the previous entry in the file.

Save this modified version of the file.

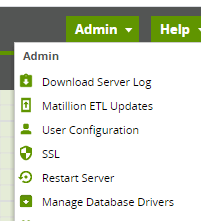

Now, return to the Matillion GUI and, from the Admin dropdown menu (upper right hand corner of the screen) select Restart Server – this will read in the updated configuration settings.

Connect the Data Source

At this point we now have a Matillion instance that is configured to access data held within a Delta Lake source so all that remains is to specify how to connect to the specific data source we want to use.

To make this connection, we will need to log into our Databricks account and open the Clusters tab. Alternatively, select the Databricks SQL link and select the Endpoints tab.

From the displayed list of clusters / SQL Endpoints select the cluster / endpoint you want to use as the computing resource when extracting data from your Delta Lake database.

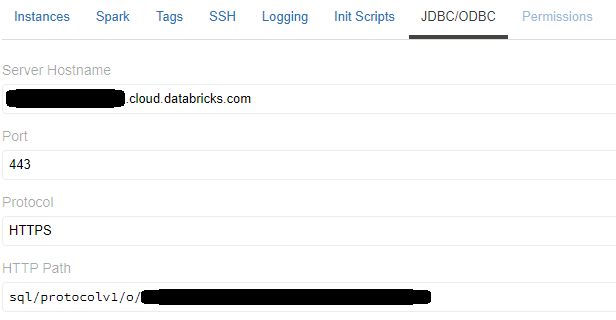

For standard clusters, you should expand the Advanced Options and click the JDBC/ODBC tab.

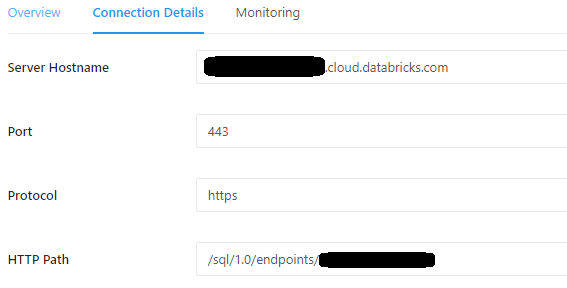

In the case of an endpoint, simply expand the Connection Details tab.

In both cases, make a note of the Server Hostname and also the HTTP Path entry.

Note that the server hostname domain will differ depending upon the cloud provider hosting your Databricks installation.

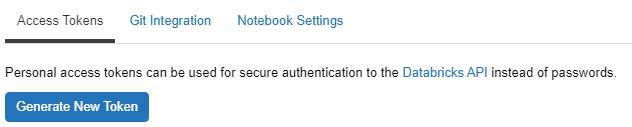

Finally, click on the Account icon within the Databricks portal (upper right hand corner of the window () and select User Settings from the dropdown list. Select Access Tokens from the user settings screen and click the Generate New Token button.

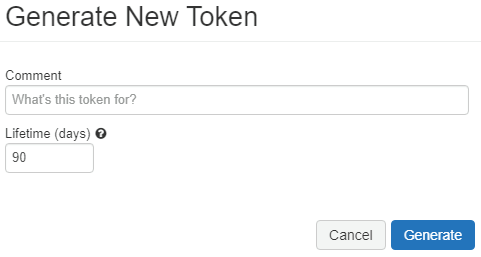

This will dispaly the following dialogue box:

Enter a meaningful name for the new token and provide the number of days for which the token will be valid (or accept the default provided). Click the Generate button to create the new token.

At this point, a window will appear displaying the requested token. You must make a copy of this token as it will not be possible to retrieve these details once this window is closed.

Now return to your Matillion GUI.

If you don’t already have one available, create an Orchestration Job and add a Database Query component to the canvas.

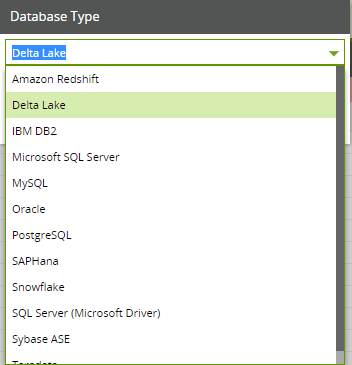

Open the Database Type property for the component and select Delta Lake from the subsequent dropdown list.

The Connection URL will now change to show the expected structure needed to make the connection to you Delta Lake database. Open this property now.

Replace the <server-hostname> and <http-path> placeholder text with the details noted earlier from your Databricks account and click OK.

Open the Username property and set this to the value token.

Open the Password property and, using your preferred delivery method (password manager, variable or storage within the component) set the password to the token generated from within your Databricks account.

Load the Required Data

With the configuration now complete, you can use the Database Query component as you would for any other database source.

One detail to keep in mind when using this method to obtain data from Delta Lake sources is the slight difference in terminology being used. When using Delta Lake the term schema is used to refer to what we would normally describe as a table in, for example, Snowflake. In fact, within Delta Lake there is no concept that directly relates to a schema in our traditional data warehouses.

As such, when using the component in basic mode you should use the Data Schema property to select the name of the Delta Lake database within which the table you wish to query resides. Once selected, the Data Source property will list the tables as expected.

Faster time to business value

Now you should be able to access Delta Lake table data directly from within Matillion ETL. With this capability, your developers can focus more on the transformation of the data into business information and worry less about the location and structure of the raw data source.

Learn More About Matillion ETL for Delta Lake on Databricks

Does your enterprise use Delta Lake on Databricks? Learn more about how Matillion ETL for Delta Lake on Databricks can help your data team be more productive and bring faster time to business value for your whole organization.

Request a demo

Featured Resources

Enhancing the Data Productivity Cloud via User Feedback

Find a data solution that allows you the option of low-code or high-code, extreme amounts of flexibility, tailored for cloud ...

NewsVMware and AWS Alumnus Appointed Matillion Chief Revenue Officer

Data integration firm adds cloud veteran Eric Benson to drive ...

BlogHow Matillion Addresses Data Virtualization

This blog is in direct response to the following quote from ...

Share: