- 07.21.2017

Using the S3 Put Object Component in Matillion ETL for Amazon Redshift

The S3 Put Object component provides an easy-to-use graphical interface for connecting to a remote host and copying a file to an Amazon S3 bucket. It can copy files over a number of common network protocols to a specific S3 bucket. Many customers use it to stage external files in S3 before loading the data from S3 into Amazon Redshift.

The component is completely self-contained, so no additional software needs to be installed. It is included in a standard Matillion license, so there is no additional cost for using it.

Video

Watch our tutorial video for a demonstration on how to set up and use the S3 Put Object Component in Matillion ETL for Amazon Redshift.

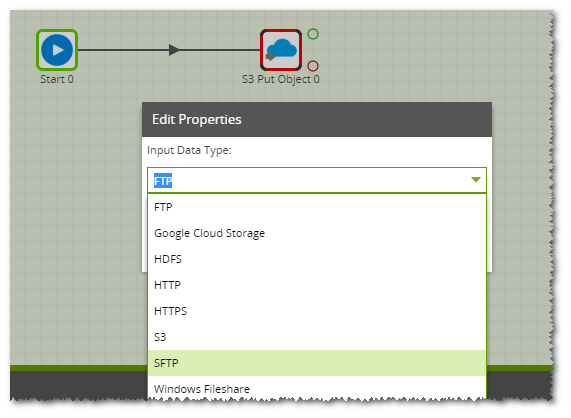

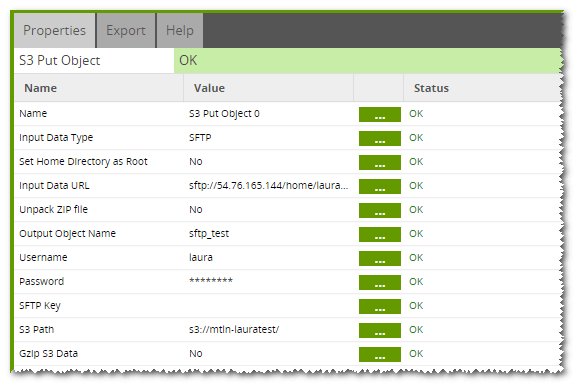

Input Data Type

Matillion ETL for Amazon Redshift can currently connect to the protocols listed below. This article uses an SFTP site as an example; however, the component works in a similar way for all of them.

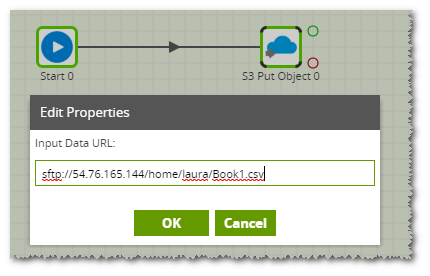

Input Data URL

This is the location of the file to be copied, including the file name. The component supports any file type and can unzip files if required. However, it copies only one file from the source to the S3 bucket each time it runs. The username and password for the SFTP site can either be included in the URL or be specified in separate properties further down.
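As a rough illustration of putting credentials in the URL, the Python sketch below builds an `sftp://` URL using the standard `user:password@host` form. The host, path and credentials are hypothetical. Percent-encoding special characters in the password is an assumption based on general URL rules (RFC 3986), so check how your Matillion version treats encoded credentials, or use the separate username and password properties instead.

```python
from urllib.parse import quote, unquote, urlsplit


def build_sftp_url(host, path, username=None, password=None, port=None):
    """Build an sftp:// URL, optionally embedding credentials in the userinfo part.

    Characters such as '@', ':' and '/' in the username or password are
    percent-encoded so they are not mistaken for URL delimiters.
    (Assumption: the consuming tool decodes percent-encoded credentials.)
    """
    userinfo = ""
    if username is not None:
        userinfo = quote(username, safe="")
        if password is not None:
            userinfo += ":" + quote(password, safe="")
        userinfo += "@"
    netloc = userinfo + host + (f":{port}" if port else "")
    # Keep '/' separators in the remote path and encode anything else unsafe.
    return f"sftp://{netloc}/{quote(path.lstrip('/'), safe='/')}"


# Hypothetical host, path and credentials, for illustration only.
url = build_sftp_url("sftp.example.com", "/exports/sales.csv", "etl_user", "p@ss:word")
print(url)  # sftp://etl_user:p%40ss%3Aword@sftp.example.com/exports/sales.csv

# Round trip: the standard library parser recovers the original password.
parts = urlsplit(url)
print(parts.hostname, unquote(parts.password))  # sftp.example.com p@ss:word
```

Embedding a password in a URL means it can appear wherever the URL is displayed or logged, which is one reason to prefer the dedicated password property when available.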

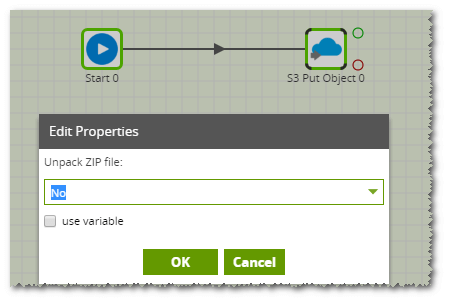

Zipped Files

The S3 Put Object component can unzip files using the Unpack ZIP file property. Because Amazon Redshift cannot load ZIP files, this is often used to unzip a file before loading it from S3 into Redshift with an S3 Load component. This works even if the source file is already in S3: simply set the Input Data Type to S3.
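To make the unpacking step concrete, here is a minimal Python sketch of what unzipping a single-file archive involves, using only the standard library. It is not Matillion's implementation: the function name is our own, and it assumes the archive holds exactly one file, mirroring the component's one-file-per-run behaviour.

```python
import io
import zipfile


def unpack_single_file(zip_bytes: bytes) -> tuple[str, bytes]:
    """Return (name, contents) of the single file inside a ZIP archive.

    Assumption for this sketch: the archive contains exactly one file;
    anything else is rejected rather than guessed at.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        members = [m for m in archive.infolist() if not m.is_dir()]
        if len(members) != 1:
            raise ValueError(f"expected exactly one file in archive, found {len(members)}")
        return members[0].filename, archive.read(members[0])


# Build a small ZIP in memory to stand in for the zipped source file.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as archive:
    archive.writestr("sales.csv", "id,amount\n1,9.99\n2,15.00\n")

name, data = unpack_single_file(buf.getvalue())
print(name, data.decode().splitlines()[0])  # sales.csv id,amount
```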

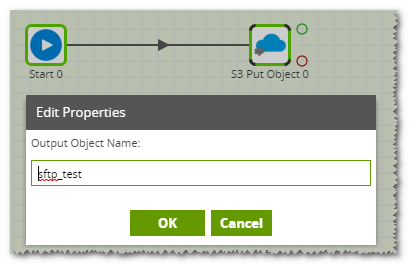

Output Object Name

This is the name of the object to be created in the S3 bucket given in the S3 Path property. It does not need to match the source file name. Note that if an object with this name already exists at the target location, it will be overwritten.
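The sketch below models how the S3 Path and Output Object Name combine into a destination, and why reusing a name replaces the earlier object. A Python dict stands in for the bucket; the bucket name, prefix and helper functions are hypothetical, and we assume here that the S3 Path may include a folder prefix.

```python
def destination_uri(s3_path: str, object_name: str) -> str:
    """Join an S3 Path (bucket, optionally with a prefix) and an Output Object Name."""
    return s3_path.rstrip("/") + "/" + object_name.lstrip("/")


# A dict stands in for the bucket: keys are object URIs, values are contents.
bucket = {}


def put_object(s3_path: str, object_name: str, body: bytes) -> str:
    uri = destination_uri(s3_path, object_name)
    # As with a plain S3 PUT to an unversioned bucket, writing to an
    # existing key silently replaces the previous contents.
    bucket[uri] = body
    return uri


first = put_object("s3://my-landing-bucket/sftp", "sales.csv", b"run 1")
second = put_object("s3://my-landing-bucket/sftp/", "sales.csv", b"run 2")
print(first == second, bucket[first])  # True b'run 2'
```

If earlier copies must be kept, giving each run a distinct Output Object Name avoids the overwrite.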

Authentication

The S3 Put Object component supports authenticating to the SFTP site with either a username and password or a private key (key-based authentication). If you use a key, it can be pasted directly into the SFTP Key property in Matillion ETL for Amazon Redshift.
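Because a truncated or mis-pasted key is an easy mistake, a quick local check can help before pasting. This Python sketch assumes the SFTP Key property expects the complete private key text, including its BEGIN and END marker lines; the function is our own and is not a check Matillion performs. The sample key body is a placeholder, not a real key.

```python
import re

# Matches a PEM-style private key block whose BEGIN and END labels agree,
# e.g. "RSA PRIVATE KEY" or "OPENSSH PRIVATE KEY".
PEM_PRIVATE_KEY = re.compile(
    r"-----BEGIN ([A-Z0-9 ]*PRIVATE KEY)-----\r?\n.+?\r?\n-----END \1-----",
    re.DOTALL,
)


def looks_like_private_key(text: str) -> bool:
    """Rough sanity check only: confirms the markers are present and matched,
    not that the key itself is valid."""
    return PEM_PRIVATE_KEY.search(text.strip()) is not None


sample = (
    "-----BEGIN RSA PRIVATE KEY-----\n"
    "PLACEHOLDER-KEY-BODY\n"
    "-----END RSA PRIVATE KEY-----\n"
)
print(looks_like_private_key(sample))                        # True
print(looks_like_private_key("ssh-rsa AAAAB3Nza user@host"))  # False: a public key
```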

S3 Path

This is the destination bucket for the copied file. The selector lets you choose an S3 bucket in your AWS account. You can also specify a public S3 bucket, provided you have write access to it.

We recommend you GZip any large files to improve the performance of the component. This is done using the GZip S3 Data property.
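To show why compressing helps, the following Python sketch gzips some synthetic CSV data with the standard `gzip` module and compares sizes. The data is made up and the actual ratio depends on your files. A gzipped file must then be loaded with gzip decompression enabled; Redshift's `COPY` command accepts a `GZIP` option for this.

```python
import gzip

# Synthetic, repetitive CSV rows standing in for a large source file.
rows = "".join(f"{i},customer_{i % 50},{i * 1.5:.2f}\n" for i in range(10_000))
raw = rows.encode("utf-8")

compressed = gzip.compress(raw)
print(f"raw: {len(raw)} bytes, gzipped: {len(compressed)} bytes")

# Decompressing must give back exactly the original bytes.
assert gzip.decompress(compressed) == raw
```

Fewer bytes means less data to write to S3 and to read back during the load, which is where the performance gain comes from.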

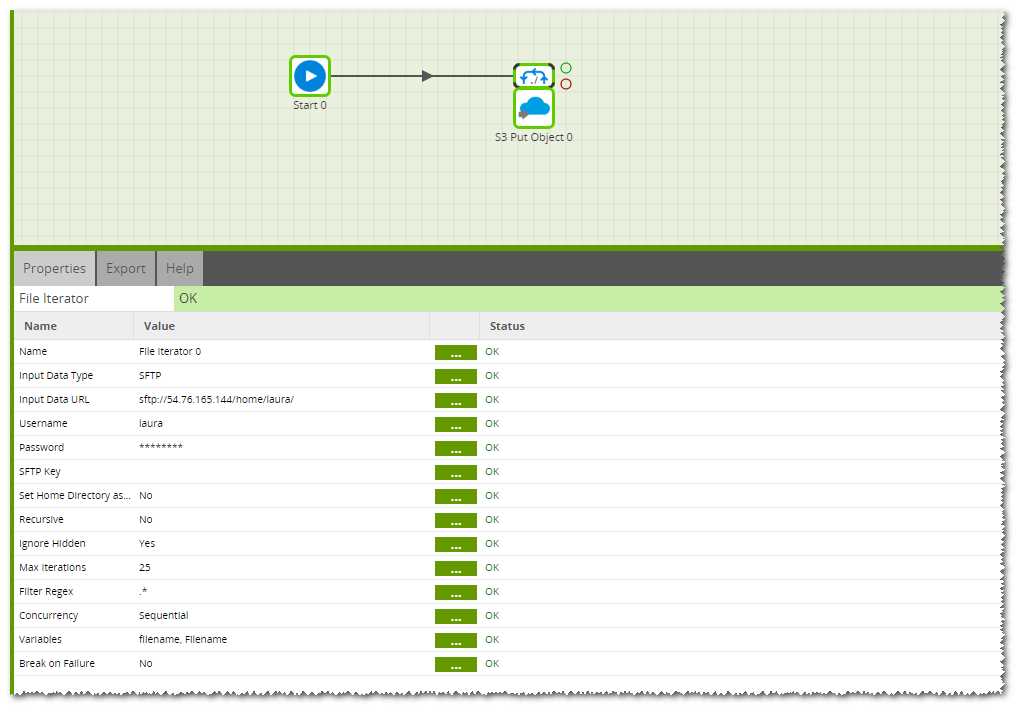

Copying More Files

As mentioned above, the S3 Put Object component copies only one file at a time. However, it can be combined with a File Iterator to loop through all the files on the SFTP site and copy each one to the target S3 bucket. To set this up, configure the File Iterator to write each file name to a variable, then reference that variable in both the Input Data URL and the Output Object Name of the S3 Put Object component. Further details on the File Iterator component are available in its documentation.
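The iteration pattern can be sketched in Python with `string.Template`, whose `${name}` placeholders resemble Matillion's variable references. The variable name `file_name`, the host and the file listing are hypothetical; in Matillion the File Iterator, not your own code, supplies the values.

```python
from string import Template

# Hypothetical property values that reference an iterator variable.
INPUT_DATA_URL = Template("sftp://sftp.example.com/exports/${file_name}")
OUTPUT_OBJECT_NAME = Template("${file_name}")


def plan_copies(file_names):
    """Return one (input URL, output object name) pair per iterated file."""
    return [
        (
            INPUT_DATA_URL.substitute(file_name=name),
            OUTPUT_OBJECT_NAME.substitute(file_name=name),
        )
        for name in file_names
    ]


# Stand-in for the file names an iterator might find on the SFTP site.
listing = ["sales_2017-07-19.csv", "sales_2017-07-20.csv"]
for source, target in plan_copies(listing):
    print(source, "->", target)
```

Because the Output Object Name follows the source file name, each iteration writes a separate object rather than overwriting the previous one.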

Conclusion

We have looked at how to transfer a file from an external source to an S3 bucket using Matillion ETL for Amazon Redshift. Once the file is in the S3 bucket, its data can be loaded into Redshift using the S3 Load component, and the power of Amazon Redshift can then be used to transform and analyse it.

Useful Links

S3 Put Component in Matillion ETL for Amazon Redshift

Integration information

Video

