- Blog

- 08.03.2022

- Data Fundamentals, Dev Dialogues

Data Mesh with Matillion

Data Mesh is an architectural approach, involving people, processes, and technology. Its goal is to help design data platforms that can provide value in a scalable way.

This article will explain the principles of Data Mesh for Matillion users, developers, and architects. Additionally, it will provide guidance on using a Data Mesh architecture with Matillion.

We are used to hearing all about data being the new oil. Why is that? Because it is valuable. The Data Mesh reasoning is that if something is valuable, it needs to have an owner. And, in fact, preferably more than one owner.

Prerequisites

The prerequisites for participating in a Data Mesh architecture with Matillion are:

- Access to Matillion ETL and Matillion Data Loader.

- Multiple data sources - as we will explain, Data Mesh is a more appropriate strategy when multiple sources of data are being used.

- Alignment across the organization - in cases where certain definitions need to be standardized across the business, the teams that make up the Data Mesh need to be politically ready to cooperate.

Data Mesh Explained

The key to Data Mesh is to elevate "data" to the status of a product. We often think about "product" in the context of external customers. Whereas with Data Mesh, data is an internal product that meets internal needs.

With this recognition of value, data becomes a top-level entity in itself — rather than something that mysteriously lurks in the background in support of other efforts.

Being a product implies that data has an owner. The owner is responsible for ensuring that the data is fit for purpose, and for adapting it as needs change.

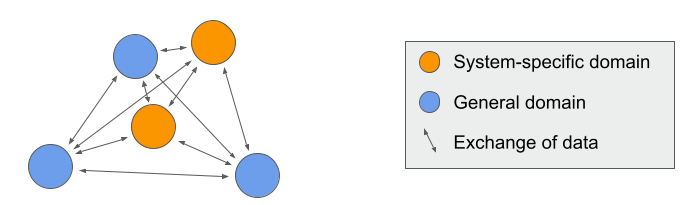

Experience has shown that it is inefficient to try and centralize all data ownership in a single monolithic application. The necessary combination of business knowledge and technical expertise is difficult to scale and causes bottlenecks. No single role can own all the data. In a Data Mesh, responsibility is split out into areas known as domains.

Distributed ownership means that the domain data owners are responsible for making their data accessible and highly consumable for everyone else. This is what makes it a mesh rather than a hub-and-spoke model.

As the number of domains increases, Data Mesh would tend to become an unmanageable spider web without one more important factor; discoverability.

Rather than jealously guard their data asset and hide it away, data product owners must ensure it is easy to access and easy to consume - ideally through self-service. This is part of a good data culture, and includes:

- Documenting what is available

- Providing simple - and ideally automatable - means of access

- Taking part in centralized governance and infrastructure

In this way, a scalable set of distributed, discoverable data is made available to meet the internal data needs.

Data Mesh vs Data Warehouse

As a monolith with a single owner, discoverability should never be an issue for a centralized data warehouse. Despite this, process-oriented thinking means that data swamps can still be a risk - especially when data is simply collected without transformation or integration.

For a data warehouse it is relatively easy to enforce the use of centralized and standardized definitions known as "conforming" dimensions. With a conformed dimension in place, whenever anyone in the organization talks about a certain "by" criteria, they are all referring to one single, common definition.

In contrast, if domain owners within a Data Mesh attempt to create conformed dimensions, they are likely to hit two difficulties:

- Political - asserting and enforcing ownership

- Practical - DataOps dependencies and constraints, which can exacerbate the above

It is technically fine to go ahead with different domains using their own (probably all slightly different) definitions of a word. This is known as "polysemy". But without conformed dimensions, there are two big disadvantages that can reduce the value of the data:

- It is impossible to drill "across" from one domain to another reliably

- Reports from different domains on the same subject tend to disagree

To address these difficulties, a successful Data Mesh must ensure the political will exists to endorse and enforce the use of conformed dimensions wherever they are appropriate.

For a Data Mesh product owner, "discoverability" must include all the DataOps tools to allow consumers to deal with the practicalities of interacting with a conformed dimension. In particular, a methodology for handling late arriving dimensions is needed.

Building a Data Mesh with Matillion

Data Mesh differentiates between two kinds of domains:

- source-oriented - tightly coupled to one individual operational system

- consumer-oriented - more aligned with the needs of consumers, so usually reading from multiple data sources and involving data transformation and integration

In a typical multi-tier data architecture, a source-oriented domain maps to an Operational Data Store layer (ODS).

In contrast, a consumer-oriented (or "shared") domain is designed to maximize consumability while keeping data quality high. This maps closely to a Star Schema layer.

Reporting/analytics teams and data science teams can both take advantage of a consumer-oriented domain. Both need the same underlying data, so what is really the "product" here?

There is an argument that the product is neither a regression analysis nor a dashboard. Instead, the product is the data: the statistics and visualizations are downstream artifacts.

To support this, consider virtualizing your star schemas, and treating the 3NF or Data Vault layer as the consumer-oriented domain. Shifting the product definition up one layer can make it easier for everyone to share the same data in the way that works best for them.

One more mode of failure that Data Mesh sets out to tackle is an overreliance on siloed data engineers. It is more efficient to set up blended teams that mix subject matter expertise and technology expertise. Furthermore, it is helpful if huge amounts of technical expertise are not actually mandatory for data pipeline development.

Using a Low-Code / No-Code platform is a great enabler for fast and reliable iteration. This helps Data Mesh teams regard changes to information processing as an opportunity, not a threat.

Next Steps

Creating logical data layers is highly recommended, even if you are not planning to use a Data Mesh architecture. This article on Data Vault vs Star Schema vs Third Normal Form describes how different kinds of data models tend to work best in different data layers. The article also describes a bronze-silver-gold consumability framework.

Is "coffee" the same as "espresso"? :-) More discussion on polysemes and internal data sharing in this article on data culture.

This article on time variance describes how to implement all the common history tracking methods using Matillion, and includes a discussion on virtualized dimensions.

More about how to take best advantage of a Low-Code / No-Code platform.

Ian Funnell

Data Alchemist

Ian Funnell, Data Alchemist at Matillion, curates The Data Geek weekly newsletter and manages the Matillion Exchange.

Featured Resources

How Your Data Teams Can Do More With Marketing Analytics

Improve your marketing analytics with Matillion Data Productivity Cloud that enables businesses to centralize and integrate ...

BlogThe Importance of Data Classification in Cloud Security

Data classification enables the targeted protection and management of sensitive information. Personally Identifiable ...

BlogBuilding a Type 2 Slowly Changing Dimension in Matillion's Data Productivity Cloud

A Slowly Changing Dimension (SCD) is a dimension that stores and manages both current and historical data over time in a data ...

Share: