- 08.15.2019

Ask a Solution Architect: Can I execute jobs from the Matillion API?

What’s it like to be a Solution Architect at Matillion? For one thing, you get asked lots of questions. Many of them are interesting, and many are common enough that we want to share the answers here. Hence, our first installment of “Ask a Solution Architect.” This week, Solution Architect Veronica Kupetz explains how to execute jobs from the Matillion API. Read on.

Q: I just started using Matillion and want to run jobs outside of the built-in scheduler. Does Matillion provide an API and if so, can I use it to execute jobs?

A: Yes – Matillion does provide an API that can execute Orchestration Jobs, including the ability to accept variables as job execution parameters.

Why execute jobs outside of the Matillion user interface? Although Matillion provides a built-in job scheduler, there may be a need to execute jobs outside of the UI. For example, you could do this when working with other processes or systems that are currently scheduled to run on an operating system (OS) scheduler or from a scheduling tool. If this process is already in place, there may be no need to schedule or run jobs via the Matillion UI.

In addition, the API can perform other Matillion-related tasks, such as accessing task history and exporting projects. The Matillion API documentation describes those endpoints in more detail.

Executing an Orchestration Job Using the Matillion API

There are several ways to execute an Orchestration Job using the Matillion API. How you do it depends on your requirements and your ETL workflow. Each of the examples below will help you run the respective jobs.

Using the CURL command

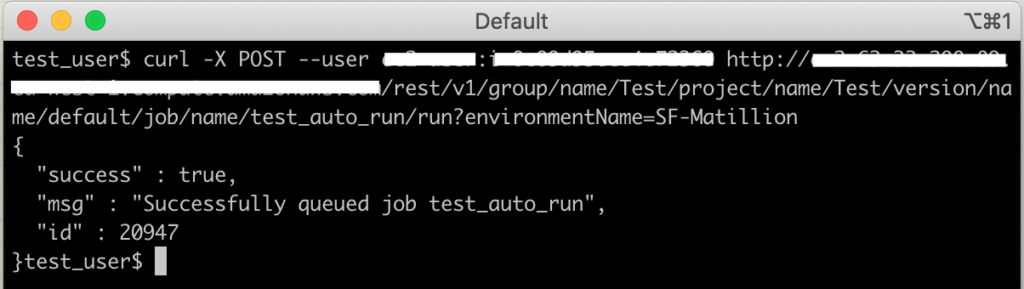

CURL command invoking the HTTP POST method

Use this method to test the command or to execute one-off Orchestration Jobs. Other options for testing are described below, but this command can also be incorporated into a script and run from an OS scheduler or another scheduling tool if required.

    curl -X POST --user <api_user>:<api_user_password> \
      "http://<instance_address>/rest/v1/group/name/<group_name>/project/name/<project_name>/version/name/<version_name>/job/name/<orchestration_job_name>/run?environmentName=<environment_name>"

NOTE: When running the CURL command over HTTPS against an instance whose certificate cannot be validated (for example, a self-signed certificate), you will need to pass the “--insecure” option, which tells CURL to skip HTTPS certificate validation. You’ll want to do the same in the second example.
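The endpoint in the command above follows a fixed pattern, so it can also be assembled programmatically. The following is a minimal Python sketch rather than anything provided by Matillion: the function name `build_run_url` and its parameters are hypothetical, and it assumes that names containing spaces or other reserved characters should be percent-encoded (the Bash example later in this post does the same for spaces).

```python
from urllib.parse import quote, urlencode


def build_run_url(instance, group, project, version, job, environment, scheme="http"):
    """Assemble the job-run endpoint, percent-encoding each name (hypothetical helper)."""
    # safe="" also encodes "/", so a name can never introduce an extra path segment.
    encoded = [quote(part, safe="") for part in (group, project, version, job)]
    path = (
        "/rest/v1/group/name/{}/project/name/{}"
        "/version/name/{}/job/name/{}/run"
    ).format(*encoded)
    # quote_via=quote encodes spaces as %20 instead of "+".
    query = urlencode({"environmentName": environment}, quote_via=quote)
    return f"{scheme}://{instance}{path}?{query}"
```

The returned string can be passed to CURL or to any HTTP client in place of the hand-written URL.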

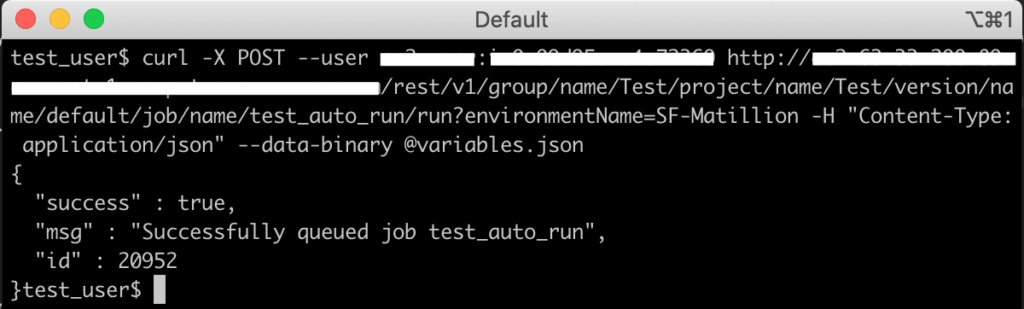

CURL command invoking the HTTP POST method and passing values for variables

This method is similar to the first one, but it also passes the job or environment variable values that the Matillion Job needs, reading them from a JSON file.

    curl -X POST --user <api_user>:<api_user_password> \
      "http://<instance_address>/rest/v1/group/name/<group_name>/project/name/<project_name>/version/name/<version_name>/job/name/<orchestration_job_name>/run?environmentName=<environment_name>" \
      -H "Content-Type: application/json" \
      --data-binary @variables.json

Here is an example of the “variables.json” file that contains the values that need to be passed for the job or environment variables:

    {
      "scalarVariables": {
        "target_schema": "TEST",
        "target_table": "test_airport2"
      }
    }

CURL command invoking the HTTP POST method and passing values for variables (variable values in the command)

This method also uses the CURL command, but it passes the variable values that the Matillion Job needs directly in the command.

    curl -X POST --user <api_user>:<api_user_password> \
      "http://<instance_address>/rest/v1/group/name/<group_name>/project/name/<project_name>/version/name/<version_name>/job/name/<orchestration_job_name>/run?environmentName=<environment_name>" \
      -H "Content-Type: application/json" \
      --data-binary '{"scalarVariables":{"target_schema":"TEST","target_table":"test_airport2"}}'

Using Postman

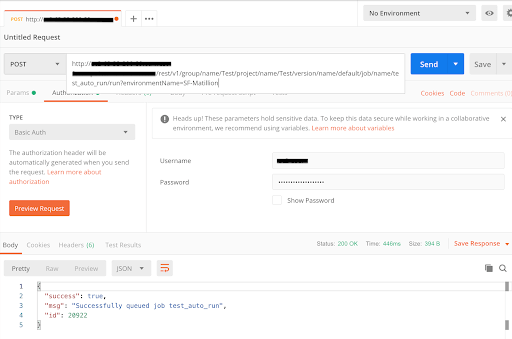

Using Postman to execute an Orchestration Job

Postman is a helpful tool for testing and developing REST and SOAP APIs. You can test GET, PUT, POST and many other types of methods as well. When using Postman, you will need to reference the username and password for authentication.

- Select the “Authorization” tab

- Under Type, select “Basic Auth”

- Enter the Username

- Enter the Password

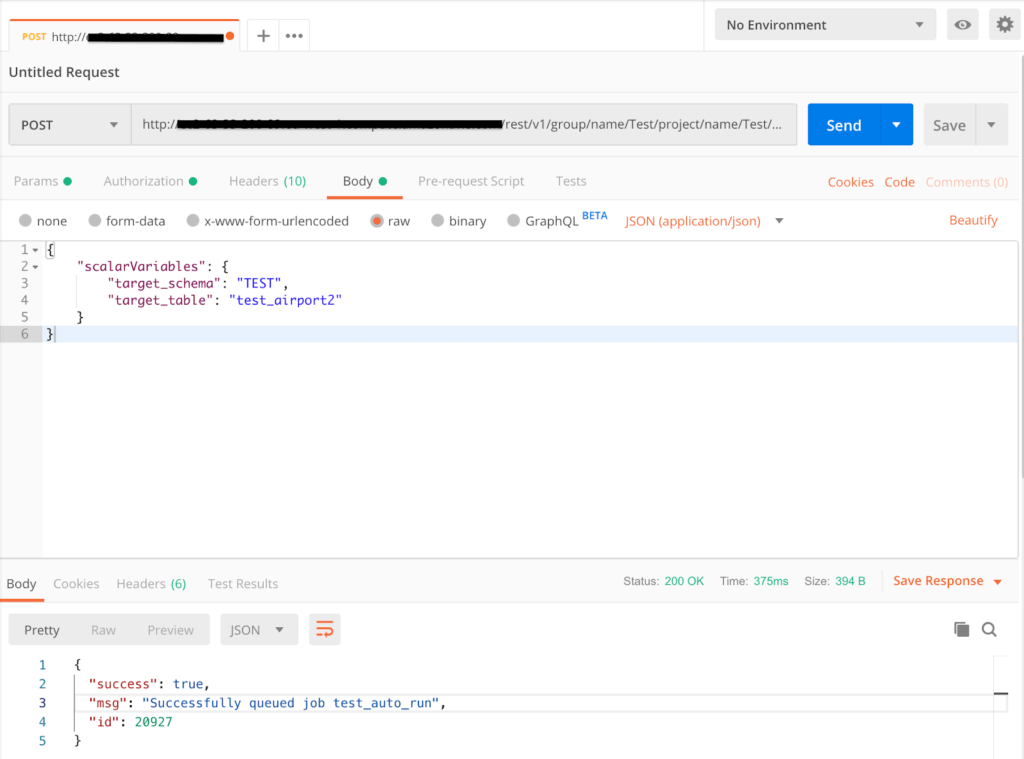

Executing Orchestration Jobs and passing variable values via Postman

You can also pass job or environment variable values when running a POST command via Postman.

- Select the “Body” tab

- Change the body type to “raw”

- Select “JSON (application/json)” and enter the values to pass through the POST request

- Don’t forget to add the username and password in the “Authorization” tab
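For context on what the “Basic Auth” setting does: HTTP Basic authentication sends the username and password as a Base64-encoded Authorization header. The following Python sketch shows how that header value is formed; the function name `basic_auth_header` is hypothetical and is only meant to illustrate the standard scheme, not any Matillion-specific behavior.

```python
import base64


def basic_auth_header(username, password):
    """Return the Authorization header value for HTTP Basic authentication."""
    # The credentials are joined with a colon, then Base64-encoded.
    credentials = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")
```

Because Base64 is an encoding and not encryption, the credentials are only protected in transit when the request is sent over HTTPS.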

Using a Bash Script

Calling the Matillion API in a Bash Script

Here is an example of the Matillion API being called in a Bash script. This can be modified to pass the values of the instance name, project name, etc., as variables at run time.

    export JPATH="https://<instance_address>/rest/v1/group/name/<group_name>/project/name/<project_name>/version/name/<version_name>/job/name/<orchestration_job_name>/run?environmentName=<environment_name>"

    # Replace spaces with %20
    export JPATH="${JPATH// /%20}"

    # Launch the job, reading the JSON body from the here-document
    curl -X POST --insecure \
      -u <api_user>:<api_user_password> \
      "$JPATH" \
      -H 'Content-Type: application/json' \
      -d @- << EOF
    {
      "scalarVariables": {
        "target_schema": "TEST",
        "target_table": "test_airport2"
      }
    }
    EOF

Using a Python Script

Calling the Matillion API in a Python Script with variable values in a JSON file

Here is an example of the Matillion API being called in a Python script using the requests package. This can be modified to pass the values of the instance name, project name, etc., as variables at run time. In addition, if you run over HTTPS against an instance whose certificate cannot be validated, you will need to add “verify=False” to the requests.post call. The contents of the variables.json file are shown above.

    import requests

    headers = {'Content-Type': 'application/json'}

    # Read the job or environment variable values to send as the request body
    with open('variables.json', 'rb') as f:
        data = f.read()

    # Adjacent string literals are joined into a single URL
    response = requests.post(
        'http://<instance_address>/rest/v1/group/name/<group_name>'
        '/project/name/<project_name>/version/name/<version_name>'
        '/job/name/<orchestration_job_name>/run?environmentName=<environment_name>',
        headers=headers,
        data=data,
        auth=('<api_user>', '<api_user_password>'),
    )
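One way to pass the instance name, project name, and so on at run time is to read them from command-line arguments. The sketch below is only an illustration under stated assumptions: the option names (`--instance`, `--version-name`, `--variables-file`, and the rest) and the helpers `parse_args` and `job_url` are hypothetical, and it does not percent-encode the values.

```python
import argparse

# Same endpoint pattern as the script above, with named placeholders.
URL_TEMPLATE = (
    "http://{instance}/rest/v1/group/name/{group}/project/name/{project}"
    "/version/name/{version_name}/job/name/{job}/run?environmentName={environment}"
)


def parse_args(argv=None):
    """Parse the job coordinates from the command line (hypothetical option names)."""
    parser = argparse.ArgumentParser(description="Run a Matillion Orchestration Job via the API")
    for name in ("instance", "group", "project", "version-name", "job", "environment"):
        parser.add_argument(f"--{name}", required=True)
    parser.add_argument("--variables-file", default="variables.json")
    return parser.parse_args(argv)


def job_url(args):
    """Fill the endpoint template from the parsed arguments."""
    # str.format ignores the extra variables_file key.
    return URL_TEMPLATE.format(**vars(args))
```

The result of `job_url(parse_args())` and the chosen variables file can then replace the hard-coded URL and file name in the requests.post call.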

Calling the Matillion API in a Python Script with variable values in Script

Here is another example of the Matillion API being called in a Python Script using the requests package. In this example, we are passing the job or environment variables within the script rather than reading a file.

    import requests

    headers = {'Content-Type': 'application/json'}

    # JSON body carrying the job or environment variable values
    data = '{"scalarVariables":{"target_schema":"TEST","target_table":"test_airport2"}}'

    response = requests.post(
        'http://<instance_address>/rest/v1/group/name/<group_name>'
        '/project/name/<project_name>/version/name/<version_name>'
        '/job/name/<orchestration_job_name>/run?environmentName=<environment_name>',
        headers=headers,
        data=data,
        auth=('<api_user>', '<api_user_password>'),
    )
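Hand-writing the JSON string works for a couple of values, but it is easy to get quoting wrong as the list grows. A minimal sketch of an alternative, assuming the same “scalarVariables” body shape shown in this post, is to build the body from a Python dictionary with the standard json module; the function name `build_payload` is hypothetical.

```python
import json


def build_payload(scalar_variables):
    """Serialize variable values into the request body shape used in this post."""
    return json.dumps({"scalarVariables": scalar_variables})


data = build_payload({"target_schema": "TEST", "target_table": "test_airport2"})
```

The resulting `data` string can be passed to requests.post exactly as in the script above.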

We have reviewed a few methods and examples to execute Orchestration jobs via the Matillion API. If you are just starting to work with submitting API calls via the POST HTTP method, it is best to test out the different options provided. Ultimately, the method you choose is based on your preference and experience. To see how you can develop jobs, run them on a schedule within Matillion or how to execute jobs via the API, request a demo.
