- Blog

- 01.05.2018

Using the S3 Put Object component in Matillion ETL for Snowflake

Matillion uses the Extract-Load-Transform (ELT) approach to delivering quick results for a wide range of data processing purposes: everything from customer behaviour analytics, financial analysis, and even reducing the cost of synthesising DNA.

The S3 Put Object component in Matillion ETL for Snowflake presents an easy-to-use graphical interface, enabling you to connect to a remote host and copy files to an S3 Bucket. You can use this component to to copy files from a number of common network protocols to a specific Amazon S3 Bucket. For example, many customers are transferring external files to S3 before loading the data into their Snowflake databases.

The connector is completely self-contained: no additional software installation is required. It’s within the scope of an ordinary Matillion license, so there is no additional cost for using the features.

Video

Watch our tutorial video for a demonstration on how to set up and use the S3 Put component in Matillion ETL for Snowflake.

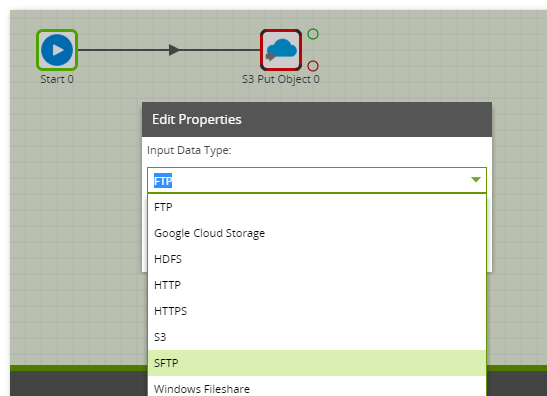

Input Data Type

Currently Matillion will connect to the below list of protocols. This article will look at connecting to an sFTP site as an example. However the functionality of all the protocols are similar.

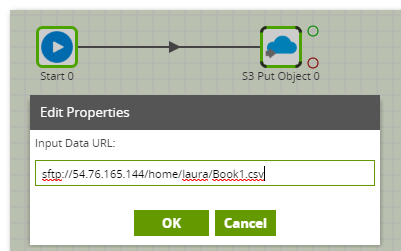

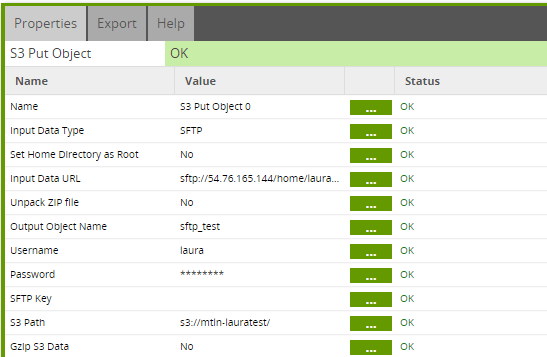

Input Data URL

This is the location of the file you want to copy, including the file name. The component will support any file types and will even unzip files if required. However it will only copy one file from the source to the S3 Bucket. You can declare the username and password for the sFTP site in the URL or this can be specified further down.

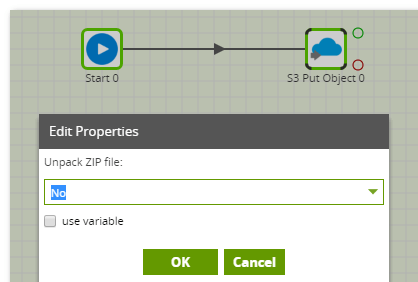

Zipped Files

The S3 Put Object can unzip files as required using the Unpack ZIP file property. Since Snowflake doesn’t support loading zipped files, you can use functionality within Matillion ETL for Snowflake to unzip a file before loading the file from S3 into Snowflake using an S3 Load component. Even if the source file is in S3 you can use this feature by selecting the Input Data Type as S3.

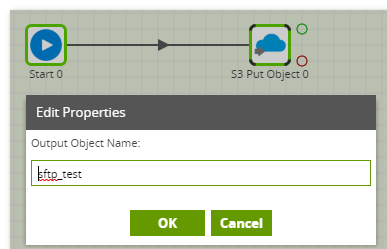

Output Object Name

This is the name of the file you will be creating in the S3 Bucket specified in the S3 Path. The output object name doesn’t need to be the same name as the source file. A new file will be created with this name. Please note that if you give a file a name already exists in the target S3 Bucket, you will overwrite the existing file.

Authentication

The S3 Put component supports authentication to the sFTP site either by username and password or by key exchange. If using an SFTP key, the key can be copied directly in the SFTP Key property in Matillion.

S3 Path

This is the destination bucket for the copied file. The selecter allows you to choose from an S3 Bucket in your AWS account. A public S3 Bucket can be specified but you will need to have write access to it.

We recommend you GZip any large files to improve the performance of the component using the GZip S3 Data property.

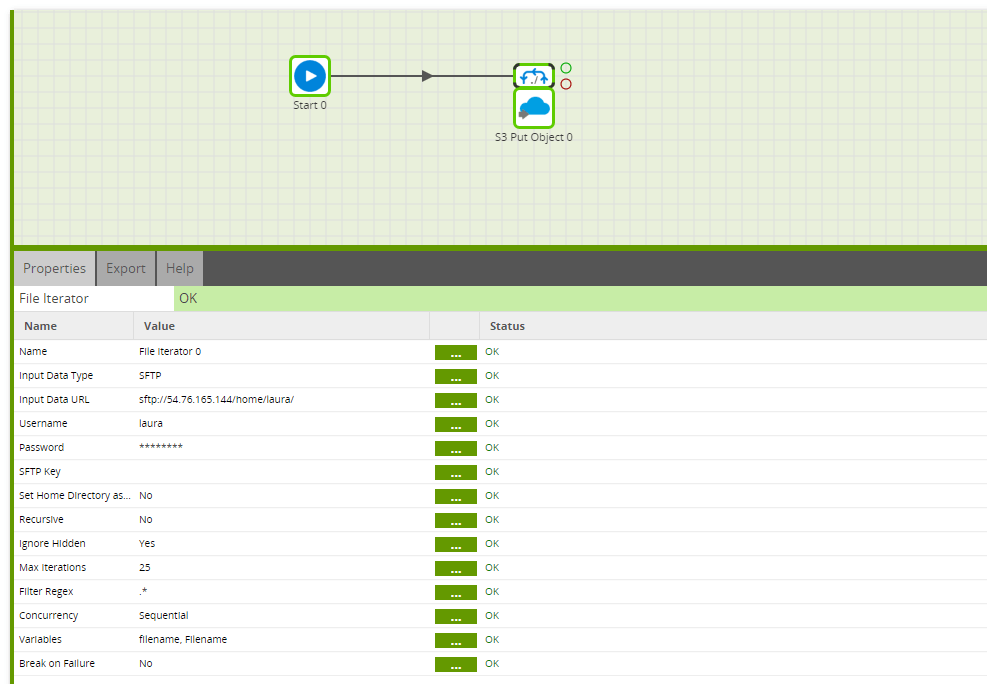

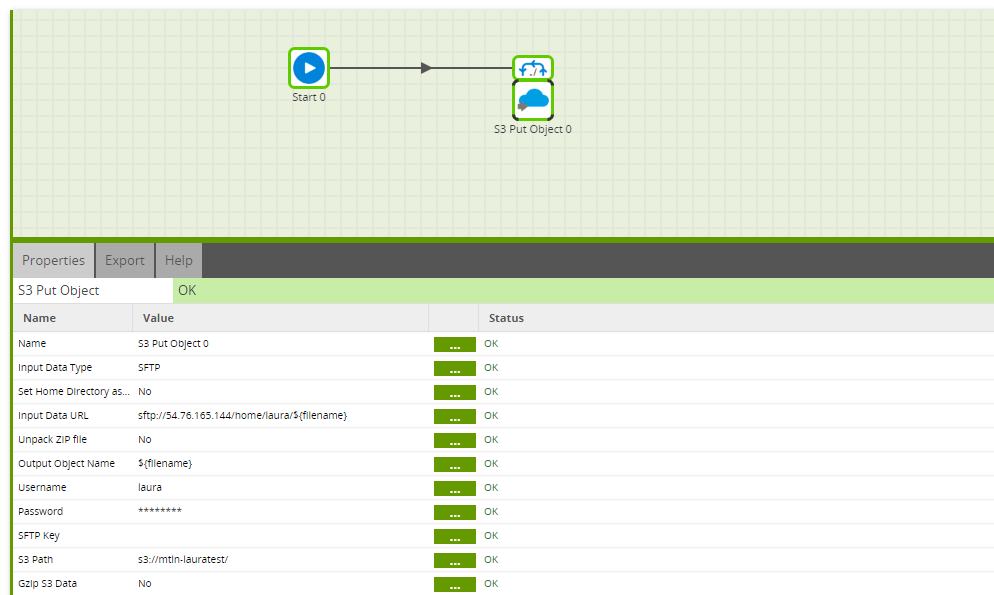

Copying More Files

As mentioned above, the S3 Put Object will only copy one file at a time, however, this component can be used in conjunction with a File Iterator to loop through all files which exist on the sFTP site and the S3 Put Object can be used to copy these files to the target S3 Bucket. This is configured by setting up the File Iterator to write the filename to a variable and then using the variable values in the S3 Put Object in the Input Data URL and as the Output Object Name. Further details on the File Iterator Component is available here.

Conclusion

We have looked at how to transfer data in a file from an external source to an S3 Bucket using Matilion. Once the file is available in the S3 Bucket you can load the data into Snowflake using the S3 Load Component. You can then use the power of Snowflake to transform and analyse the data.

Useful Links

S3 Put Object component in Matillion ETL for Snowflake

Integration information

Video

Begin your data journey

Want to try the S3 Put Object component in Matillion ETL for Snowflake? Arrange a free 1-hour training session now, or start a free 14-day trial.

Featured Resources

Custom Connector for Public Statistics (Police API)

A demonstration of the practical steps for setting up components, manipulating and validating data, creating and scheduling ...

BlogMastering Git At Matillion. An In-Depth Guide To Merging

Merging in Git is the process of integrating changes from one branch into another. Merging is the backbone of collaboration in ...

BlogWhat is an API?

What is an API? An API is an application programming interface that is a way for two or more computer programs or components ...

Share: