- Blog

- 08.09.2023

- Dev Dialogues, Data Productivity Cloud 101

A Guide to Connecting the Data Productivity Cloud Pipeline Designer to Snowflake

This guide shows how to connect the Matillion Data Productivity Cloud Pipeline Designer to a Snowflake cloud data platform account.

For Data Productivity Cloud pipelines, the metadata for connecting to Snowflake is held in an artifact known as an Environment. Let’s start with how to configure a Data Productivity Cloud Environment.

Prerequisites

The prerequisites for connecting the Matillion Data Productivity Cloud Pipeline Designer to Snowflake are:

- Access to the Pipeline Designer service via your Matillion Hub account

- You must already have at least one running ETL Agent for Designer. Either a Matillion hosted agent or a customer hosted agent

- If you have implemented Snowflake network policies, they must permit inbound access from the Matillion Data Productivity Cloud

- Snowflake credentials:

- Snowflake account identifier

- Snowflake username and password

- If you are using your own ETL Agent, you must have previously saved your Snowflake user's password in a Secret Definition with your cloud provider

Your chosen Snowflake user must have the following privileges:

- USAGE on a Virtual Warehouse

- Write access to a schema in a database

Snowflake account identifiers

The preferred way to uniquely identify a Snowflake account is to use your Organization name and your Account name.

Your unique Snowflake account identifier is the two identifiers separated by a hyphen: ORGNAME-AccountName |

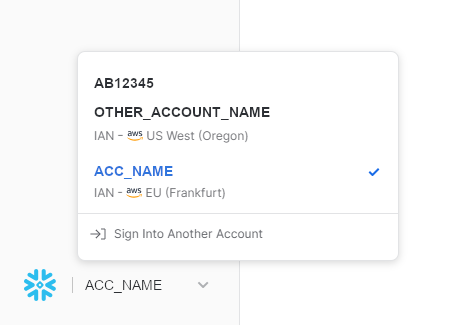

If you are using Snowsight, you can find your Organization name and Account name at the bottom left of the screen. In the screenshot below the Organization name is AB12345 and the Account name is ACC_NAME.

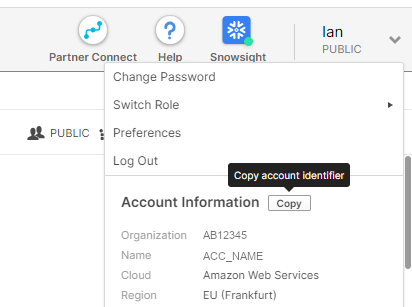

If you are still using the Snowflake classic console, you can find your Organization name and Account name under your login name at the top right of the screen. In the screenshot below the Organization name is AB12345 and the Account name is ACC_NAME.

Note that the “Copy” button in the classic console saves your Organization name and Account name separated with a period (.) not a hyphen. You must change it and use a hyphen to separate the two names.

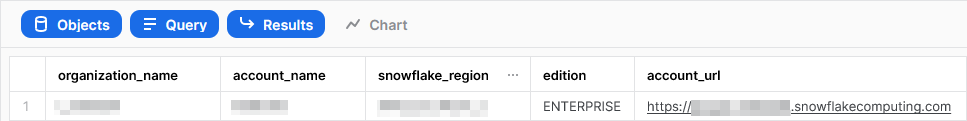

If you are using a third party SQL client, you can find your Organization name and Account name by running a SHOW ORGANIZATION ACCOUNTS query.

This query requires access to the ORGADMIN role. If you do not have this, ask your Snowflake account administrator for the details.

Some Snowflake Organizations have multiple Accounts. You can always find your Account name by using the CURRENT_ACCOUNT Snowflake function in a SQL query.

Legacy Snowflake account identifiers

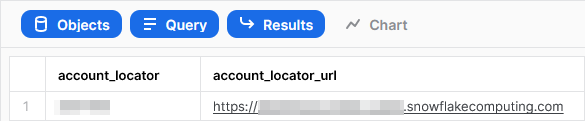

There is an older, alternative method that also uniquely identifies a Snowflake account. This is to use your account locator, plus a region and a cloud identifier.

The easiest way to find these identifiers is also to run a SHOW ORGANIZATION ACCOUNTS query. The information you will need is shown in the account_locator_url column, between the https:// and the snowflakecomputing.com part.

How to create a Data Productivity Cloud Environment connection to Snowflake

There are two ways to enter the Create an Environment dialog:

- When you create a new Project, creating an Environment is part of that

- Navigate into an existing Project from the Your Projects screen. Then follow the Environments tab, and press the Add new environment button.

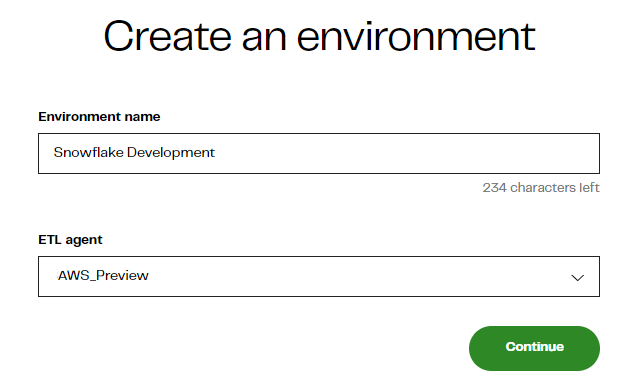

Either way, the Create an Environment dialog will ask for a name and an ELT Agent.

- Choose an Environment name that distinguishes the permissions boundary - such as your project name

- Choose a running ETL Agent for Designer

If you are getting started with a Matillion-hosted ETL Agent, you will see a screen like this after pressing Continue:

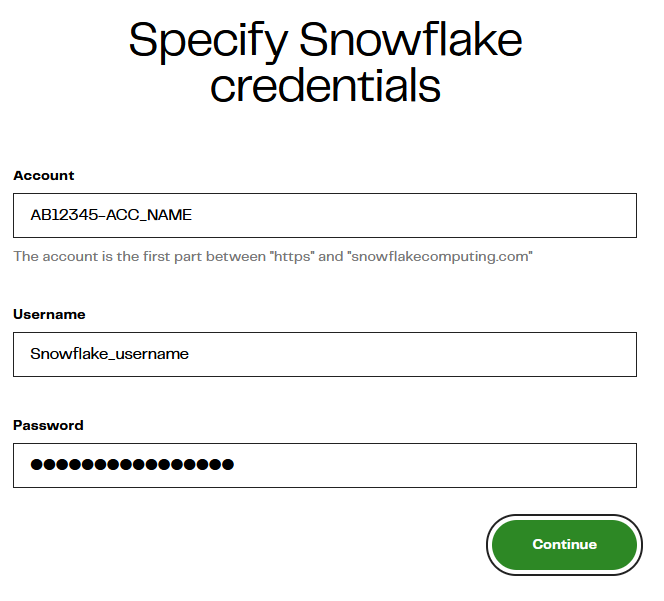

After that, you will need to type your Snowflake account identifier, plus the username and password.

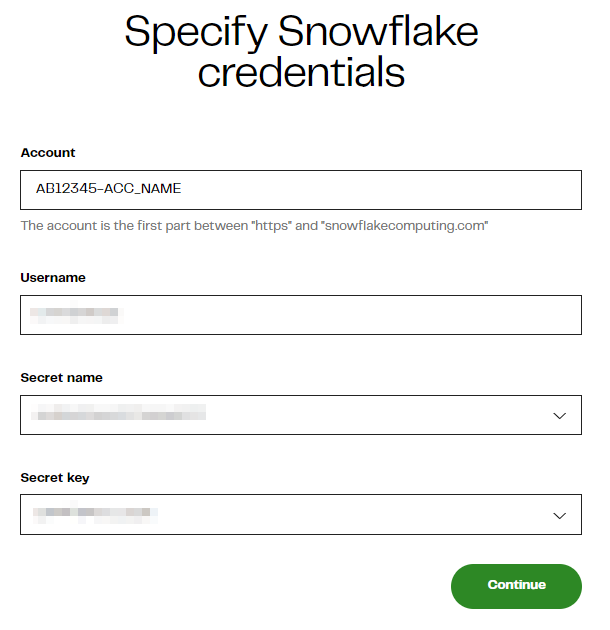

If you are using your own ETL Agent, the screen is nearly the same, except your Snowflake user's password is taken from a Secret Definition that you created previously with your cloud provider.

- For AWS, the password is held in a key within a Secret Definition of type "other". You must provide the name of the Secret and the name of the Key.

Press Continue to test the connection. If you hit a "Failed to connect to Snowflake" communication error with HTTP status 403, then check your Snowflake account identifier and your Snowflake network policies.

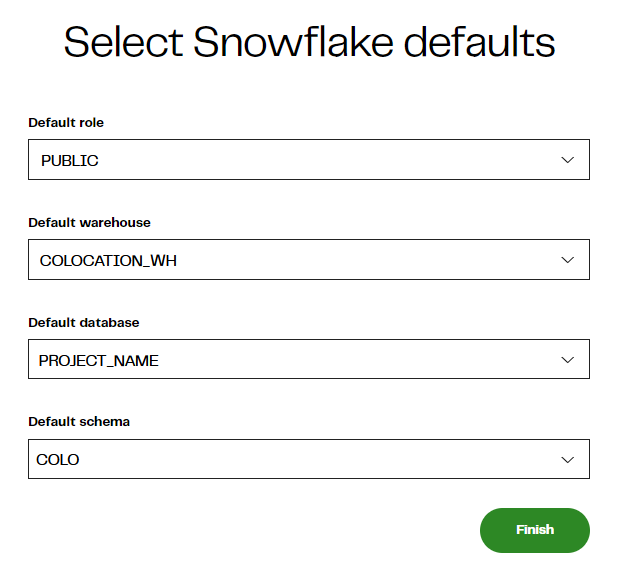

On the next screen, you will need to supply a Default Role, Virtual Warehouse, Database and Schema. The prompts cascade, so start at the top and work downwards.

If any of the prompts are blank, and you cannot select a value, your Snowflake user is insufficiently privileged.

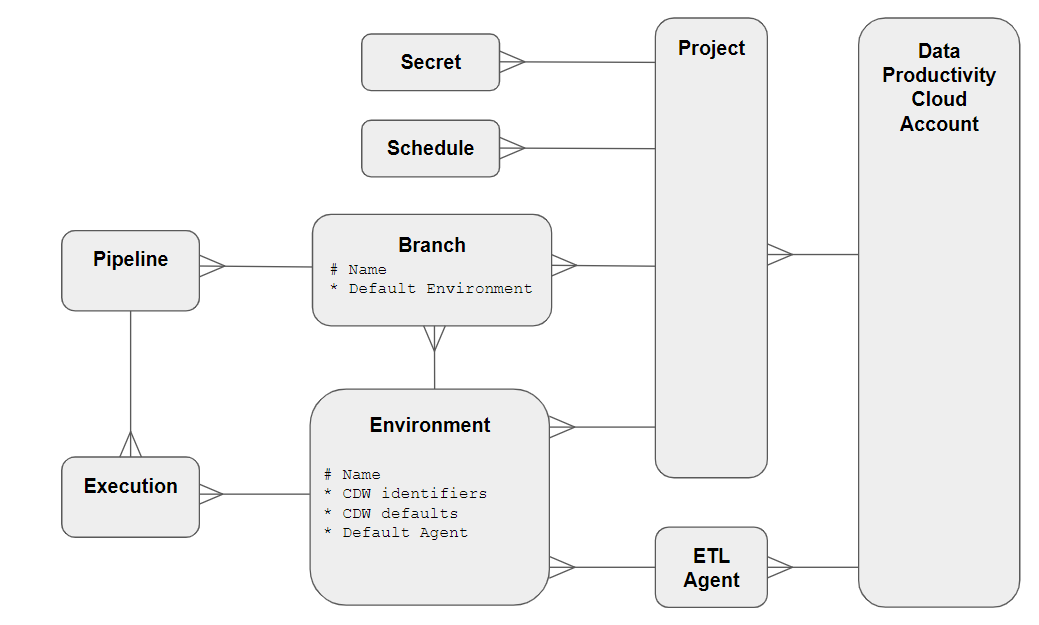

Connectivity metamodels for the Data Productivity Cloud and Snowflake

Within a Matillion Data Productivity Cloud account, you create Projects and one or more ETL Agents.

- Projects are intended to group work by subject area

- ETL Agents are in the data plane, where pipelines are executed at runtime.

- ETL Agents are also known as "Agents for Designer" to distinguish them from CDC Agents.

All pipeline development happens within a code version Branch. Pipelines run in the context of an Environment, which provides the Snowflake connection information.

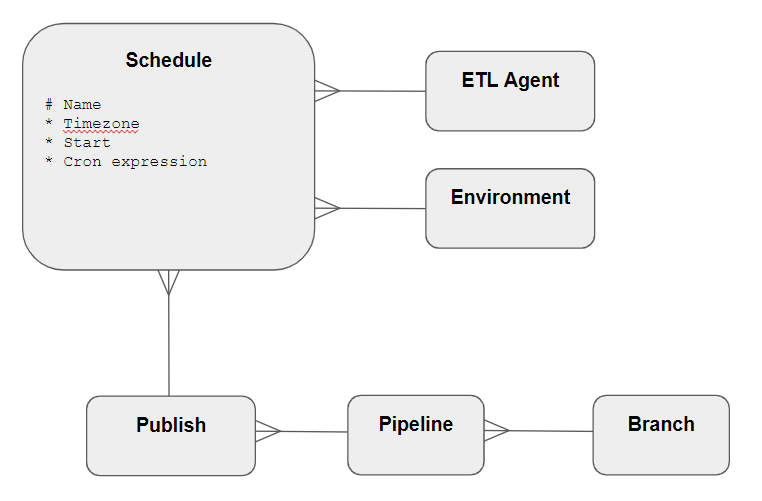

To create a Schedule, you must first commit and publish a pipeline. Publication copies the branch to the default ETL Agent, and makes all pipelines in that branch available for scheduling.

Scheduled jobs run on a chosen ETL Agent, using a chosen Environment.

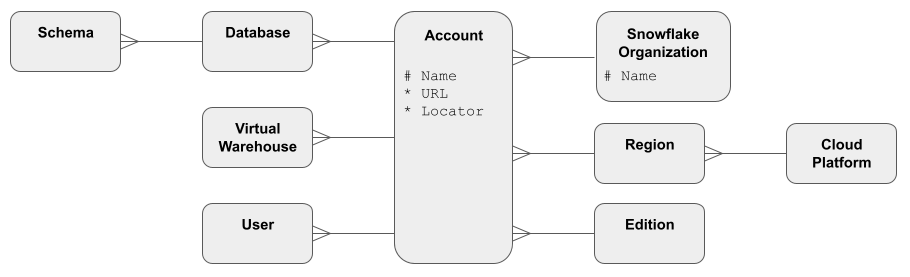

For Snowflake, the core administrative entity is the Account. Multiple accounts can be managed by one Snowflake Organization, although in many cases there is just one.

Every Snowflake account can be uniquely identified by a combination of either:

- Organization Name + Account Name (preferred), or

- Locator + Region + Cloud

The symbols in these diagrams are described in this article on data modeling techniques.

Learn more about the Matillion Data Productivity Cloud by signing into the hub or view an interactive demo.

Ian Funnell

Data Alchemist

Ian Funnell, Data Alchemist at Matillion, curates The Data Geek weekly newsletter and manages the Matillion Exchange.

Featured Resources

What Are Feature Flags?

Feature flags are a software development tool that has the capability to control the visibility of any particular feature. ...

BlogHow Your Data Teams Can Do More With Marketing Analytics

Improve your marketing analytics with Matillion Data Productivity Cloud that enables businesses to centralize and integrate ...

BlogThe Importance of Data Classification in Cloud Security

Data classification enables the targeted protection and management of sensitive information. Personally Identifiable ...

Share: