- Blog

- 08.17.2018

- Data Fundamentals

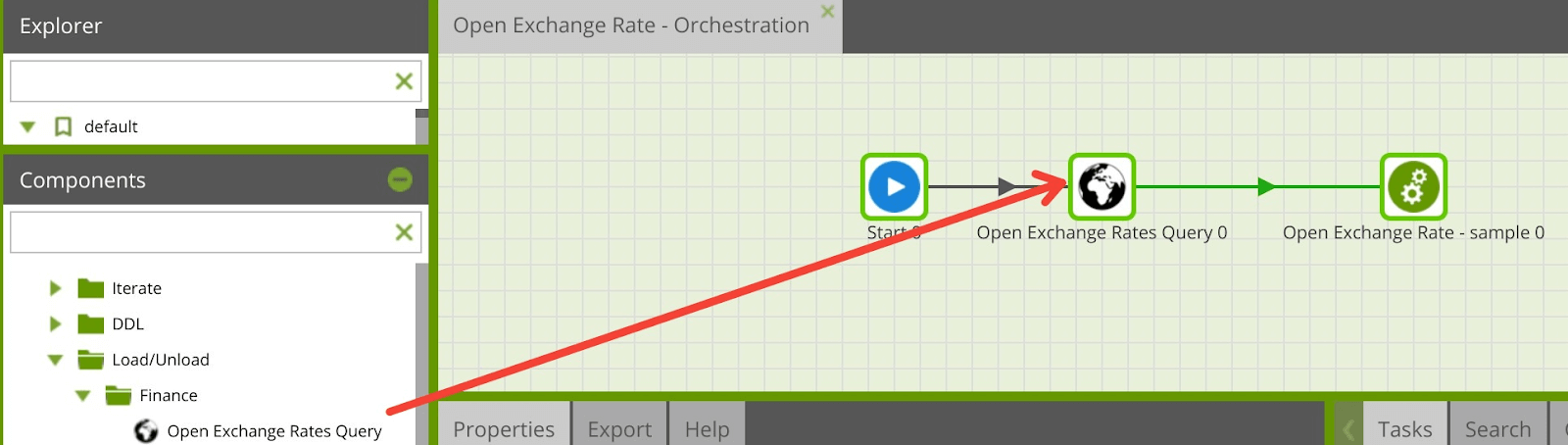

Using the Open Exchange Rates Query Component in Matillion ETL for Snowflake

Matillion uses the Extract-Load-Transform (ELT) approach to delivering quick results for a wide range of data processing purposes: everything from customer behavior analytics, financial analysis, and even reducing the cost of synthesizing DNA.

The Open Exchange Rates Query component presents an easy-to-use graphical interface, enabling you to pull data from the Open Exchange Rates API directly into Snowflake. This component is very popular with our customers to source live and historical currency exchange rate data, allowing for currency conversions of data loaded into Snowflake from additional external sources.

The connector is completely self-contained: no additional software installation is required. It’s within the scope of an ordinary Matillion license, so there is no additional cost for using the features.

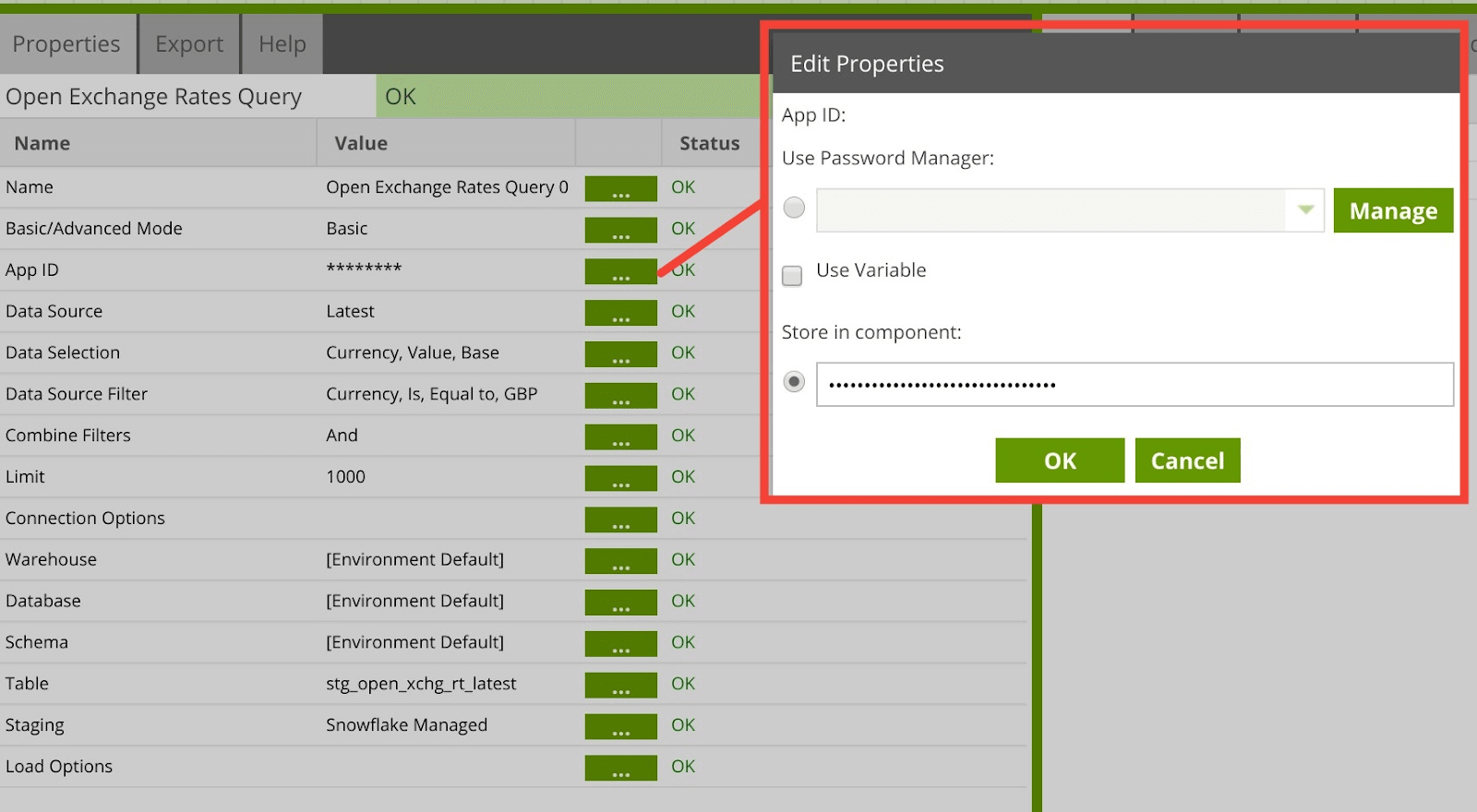

Authentication

Before using the Open Exchange Rates Query component, users are required to link to their Open Exchange Rates account via an App ID. This is the authentication required by Open Exchange Rates to utilize their APIs. See our article that describes how to Create a New Open Exchange Rates App ID.

Once an App ID has been created, it can be directly copied into the App ID field of the Open Exchange Rates Query component or used like a password through the Password Manager.

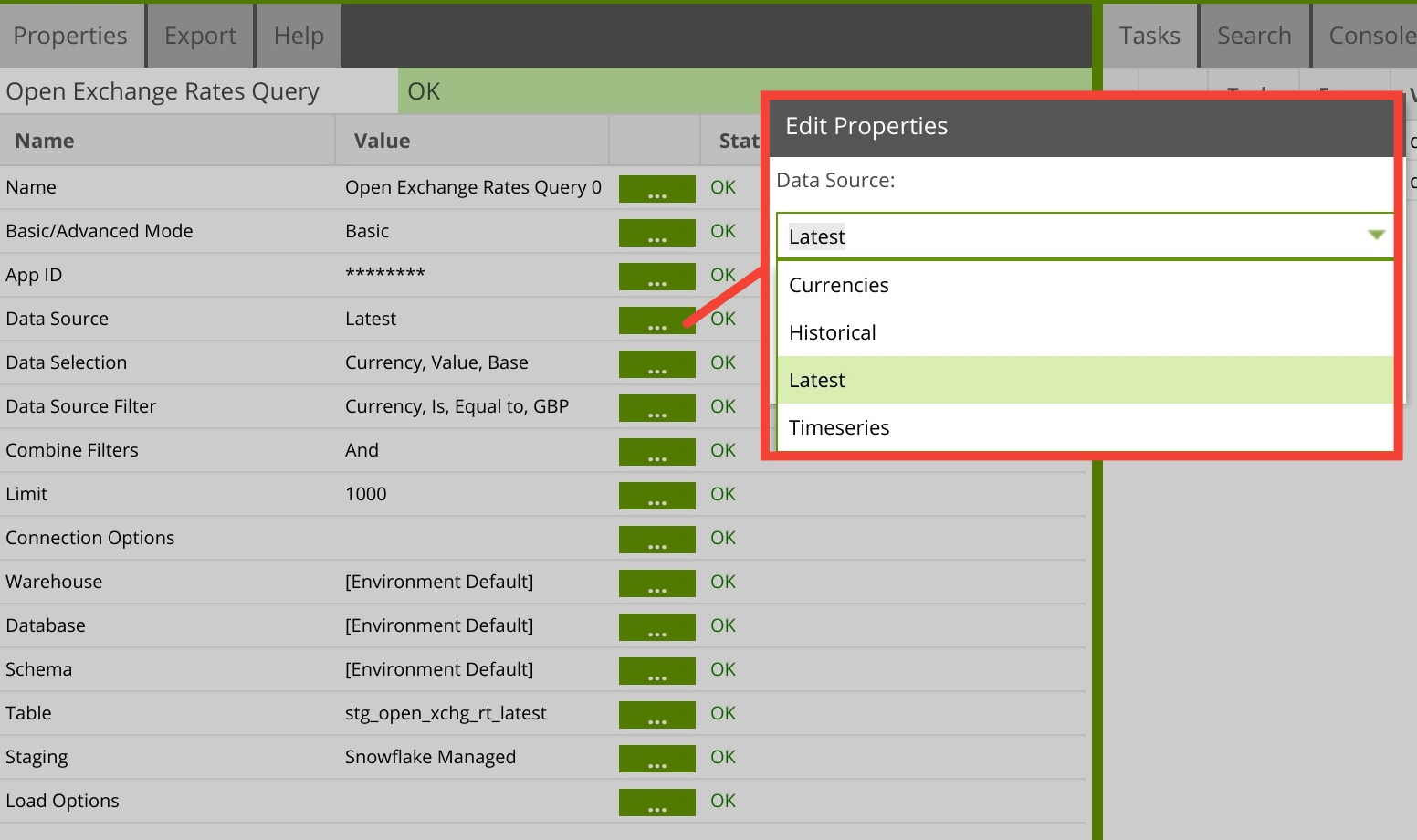

Data Source

Next, you can choose the data you want to load into Snowflake from the Data Source drop down. This is a list of the data sources available from Open Exchange Rates:

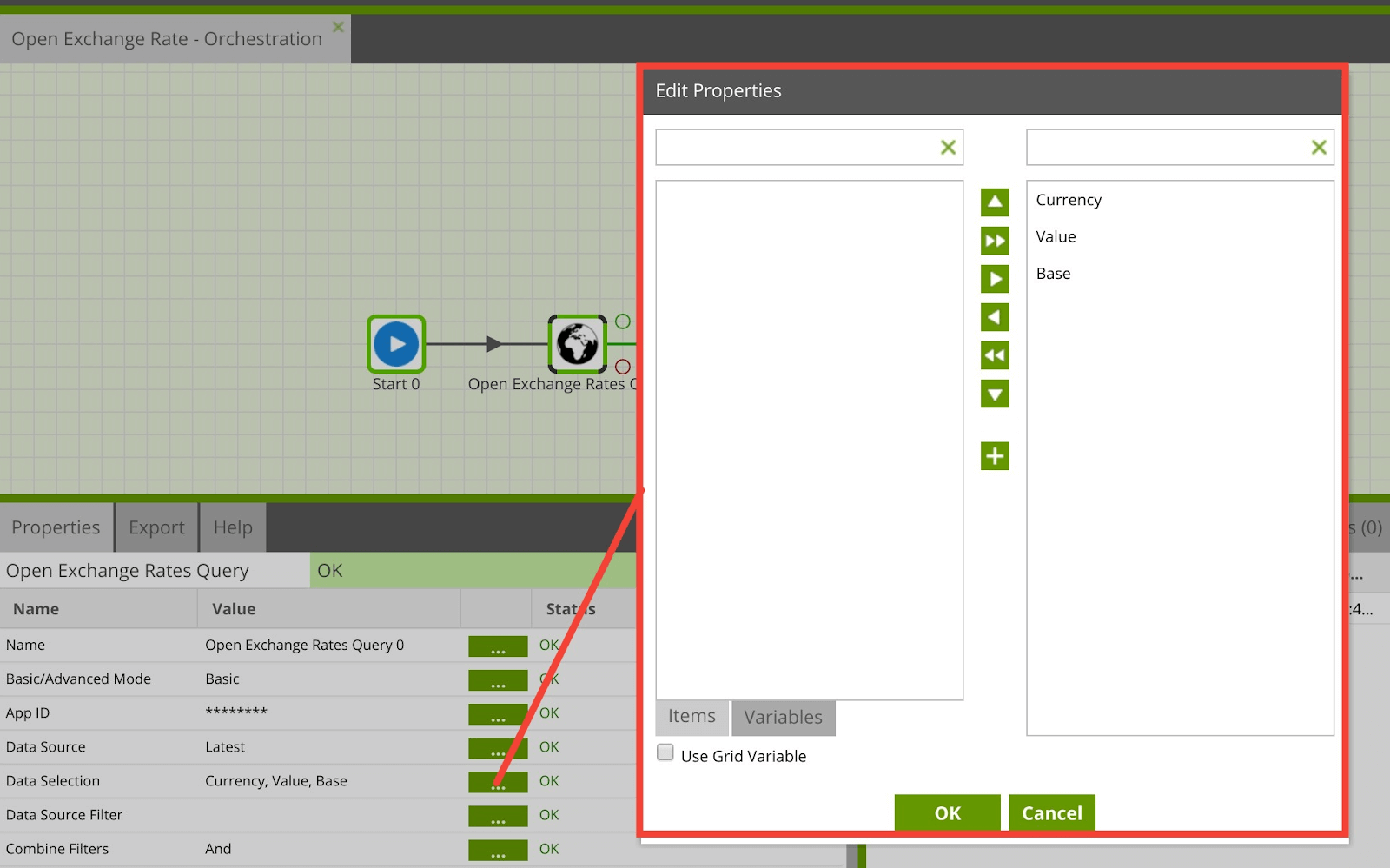

After choosing the data source, the next step is to choose the desired columns from the data source in the Data Selection. This will form the new table which is created in Snowflake.

Data Source Filter

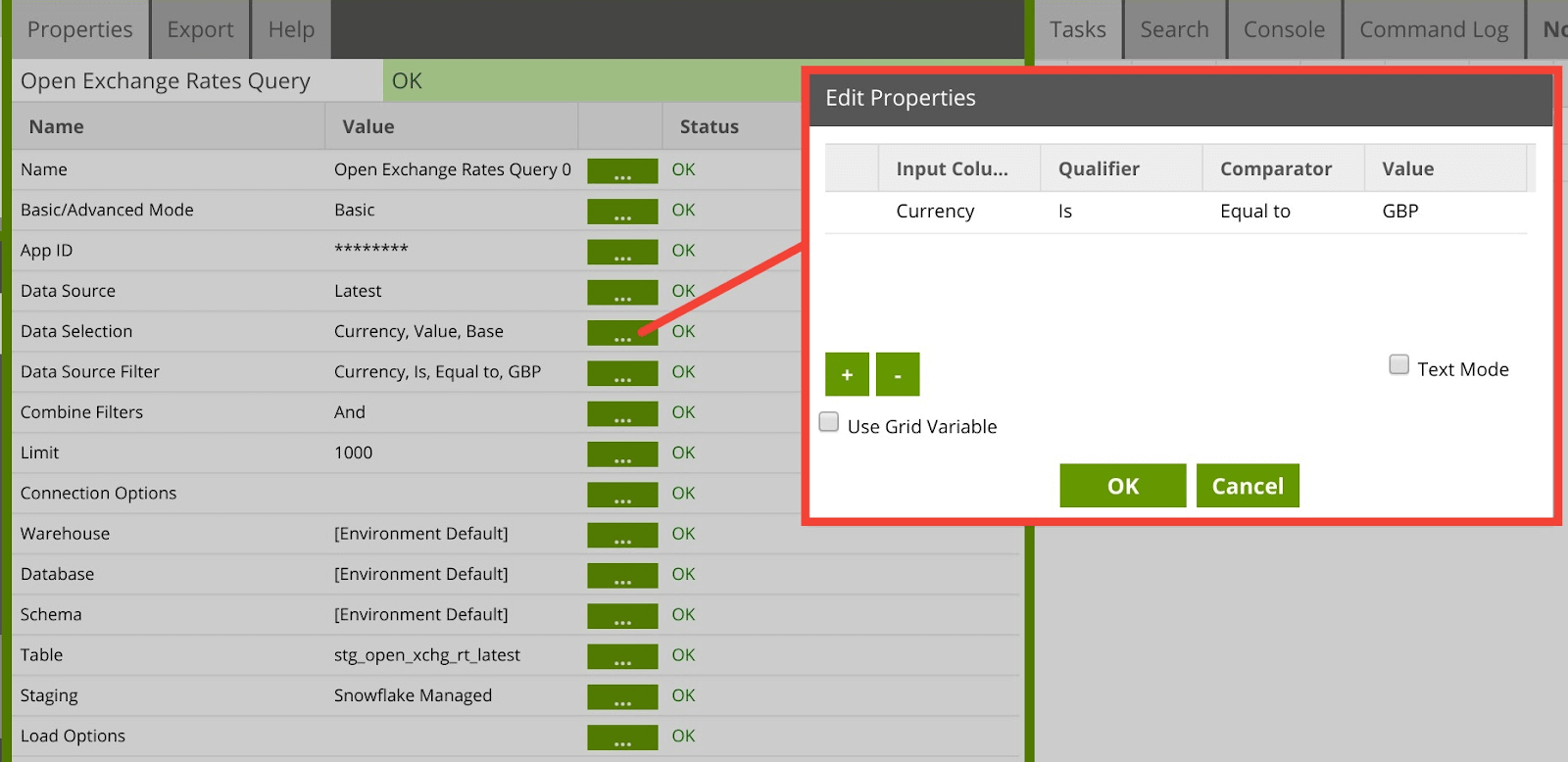

You can additionally add a filter to the data you are bringing through into Snowflake using the Data Source Filter.

Note: The “Limit” setting is applied before the Data Source Filter is applied.

Connection Options

The component has additional connection parameters you may want to explore. However, none of the Connection Options are mandatory and the Open Exchange Query component usually gives you sensible defaults. Should you wish to explore your Connection Options, further details on the options are available here.

Data Target

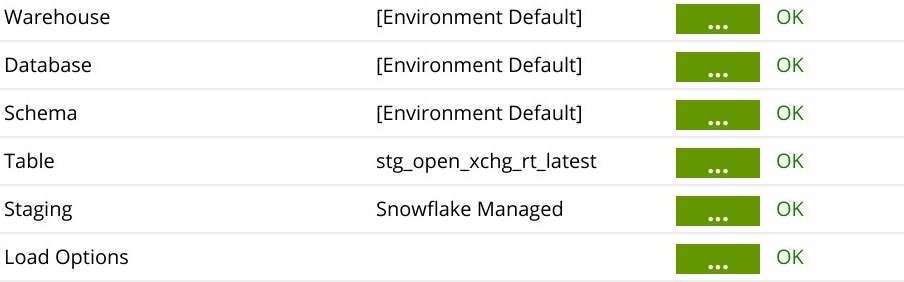

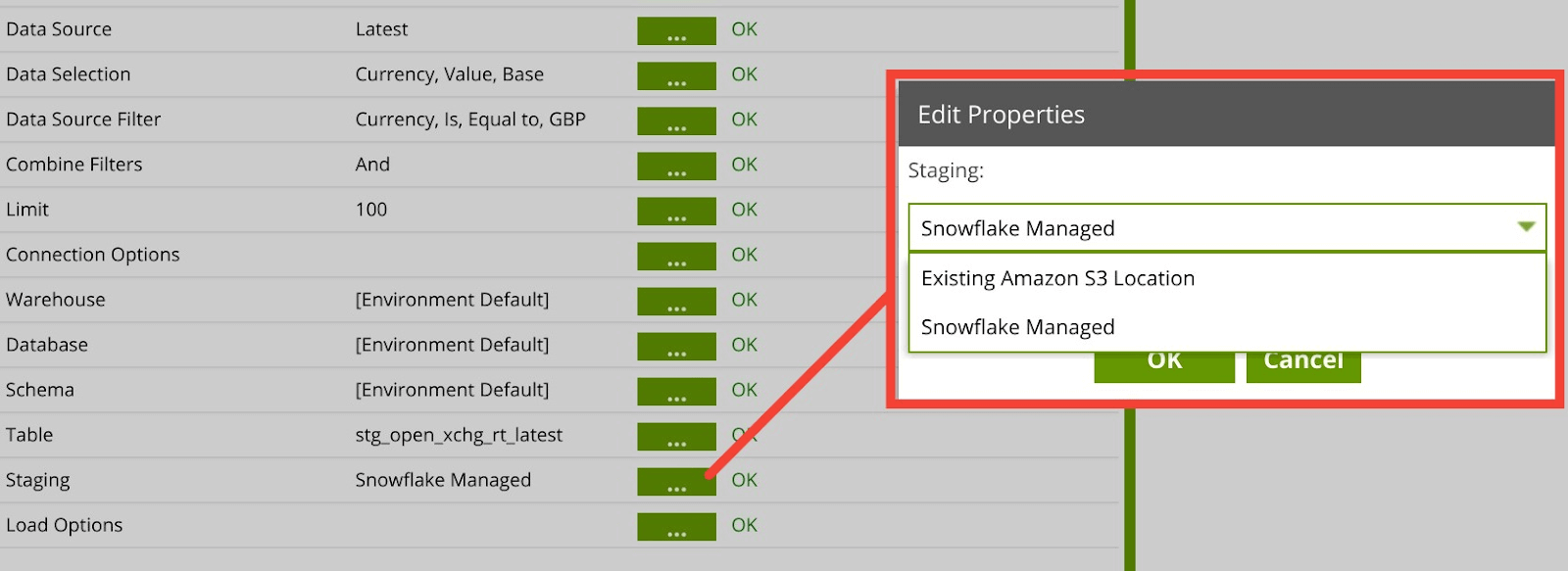

Next, you configure how you would like to load the selected data into Snowflake.

Specify a Target Table in which to load the data from Open Exchange Rates:

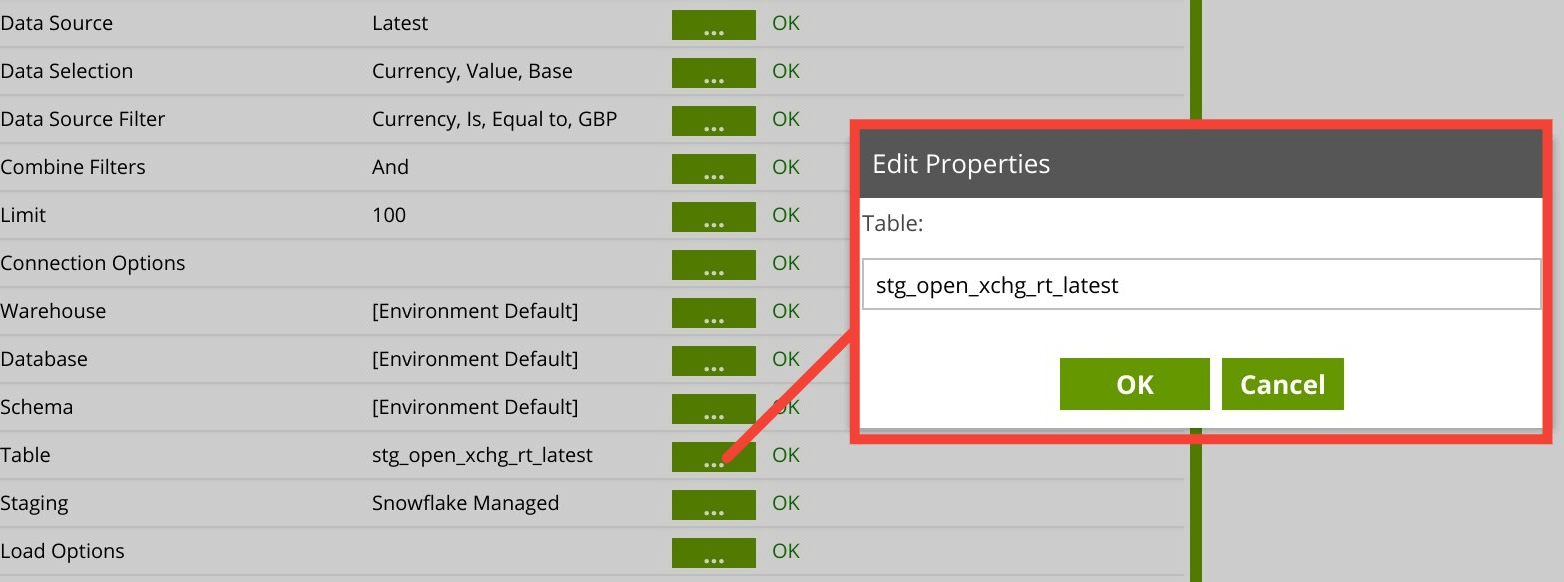

Specify an Existing Amazon S3 Location or Snowflake Managed in which to stage the data before loading into the defined Target Table in Snowflake. When selecting an Existing Amazon S3 Location, you will additionally need to provide an S3 Staging Area. When selecting Snowflake Managed, Matillion will create a temporary internal stage within Snowflake.

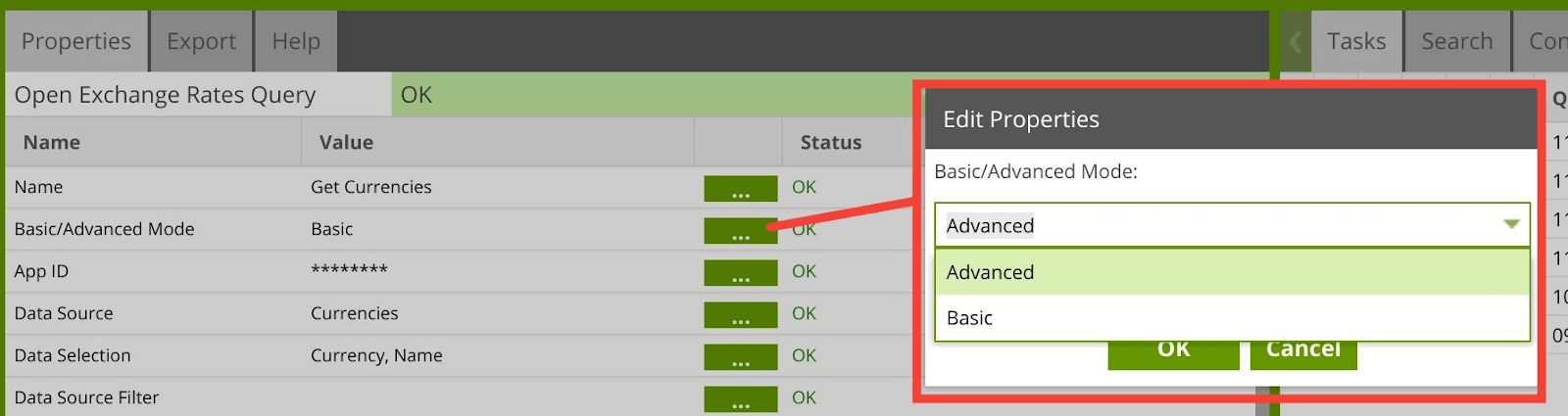

Basic/Advanced Mode

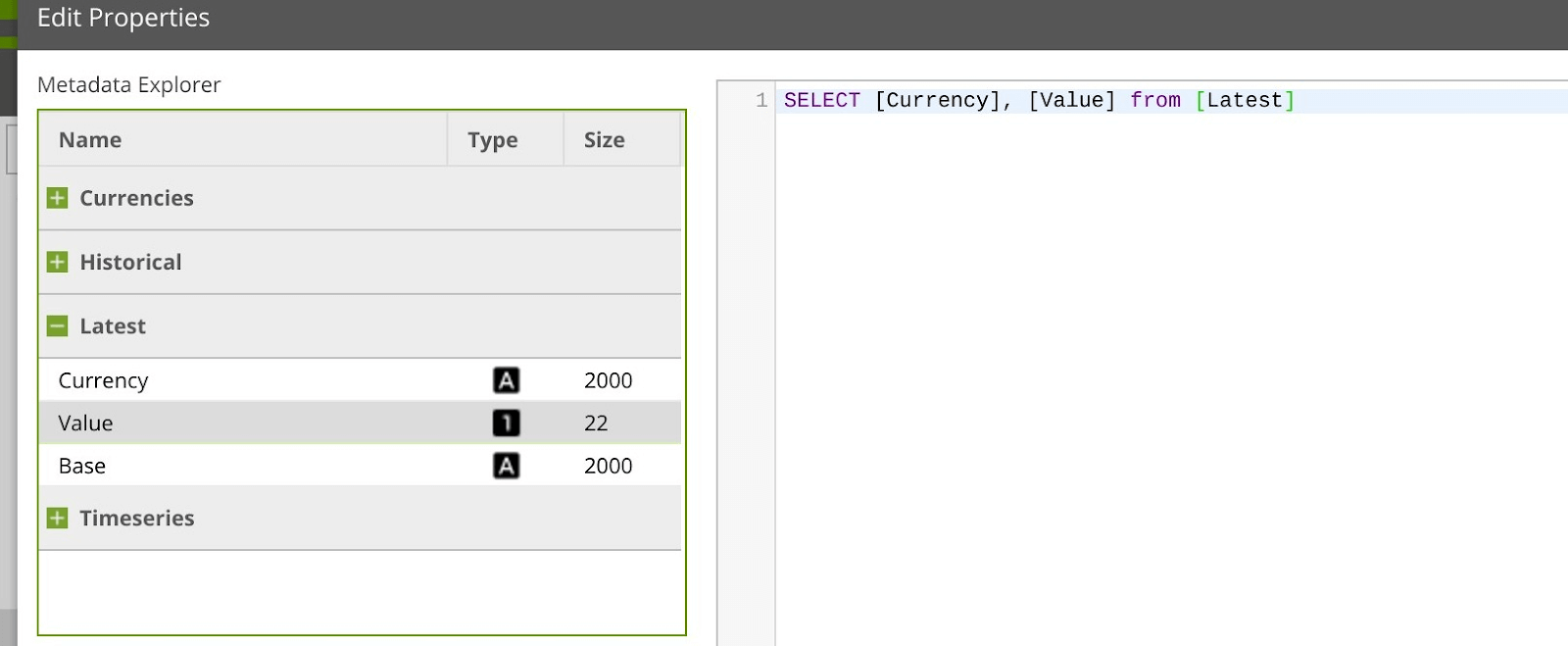

The Open Exchange Rates Query component offers an “Advanced” mode instead of the default “Basic” mode. In Advanced mode, you can write a SQL-like query over all the available fields in the data model. This is automatically translated into the correct API calls to retrieve the data requested.

Once set to Advanced, a SQL Query option becomes available and needs to be updated with the desired SQL Query:

Running the Open Exchange Rates Query component in Matillion ETL for Snowflake

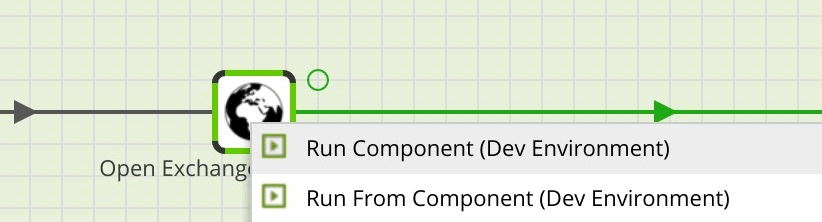

Once you have configured the minimum required properties, the outline color of the component will change to Green. At this point, you may test the component by right-clicking clicking on the component and choose Run Component. This will execute just that component and load any available data into the specified target table in Snowflake.

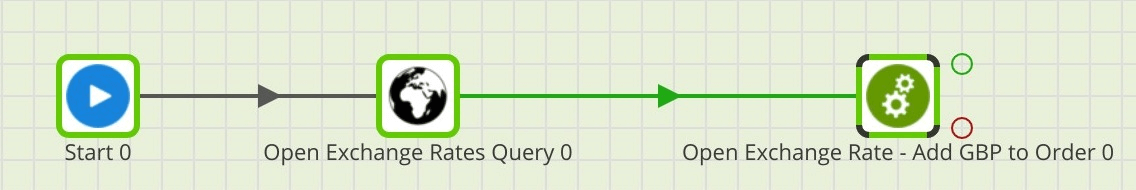

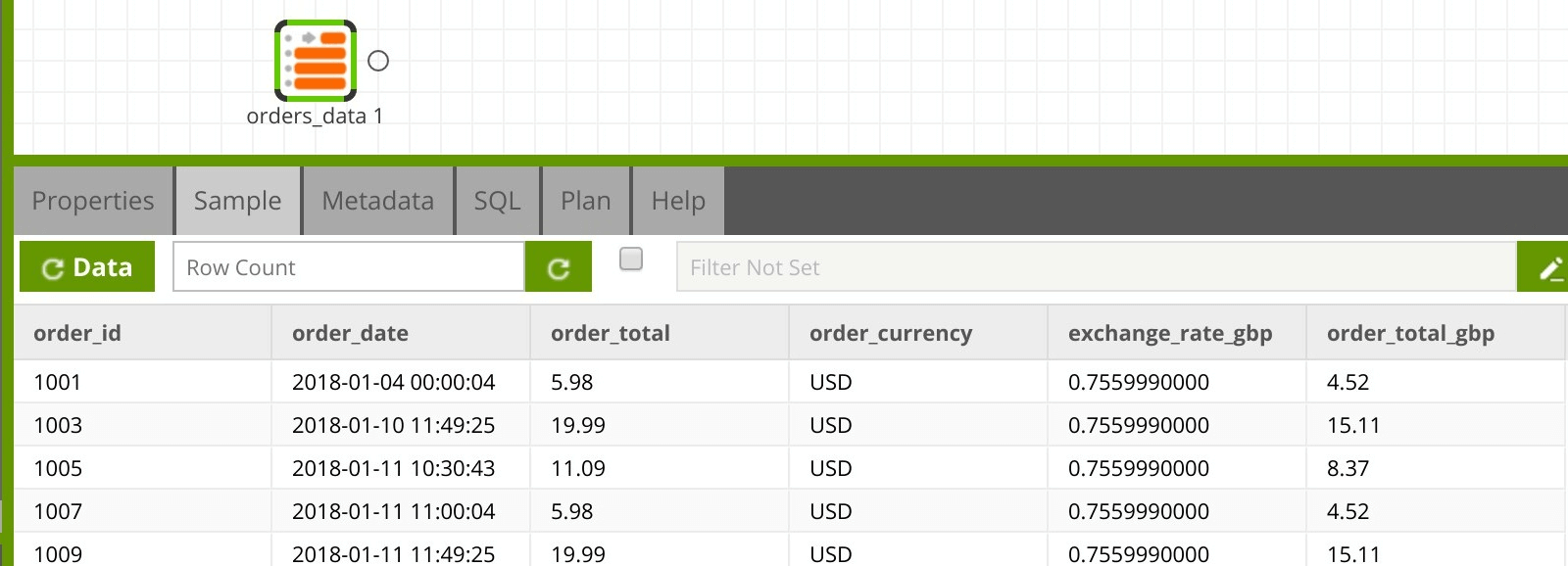

Transforming the Data

Once you have brought through the required data from the Open Exchange Rates Query component into Snowflake, you can use the data in a Transformation job to join with other data in Snowflake.

Useful Links

Open Exchange Rates Query in Matillion ETL for Snowflake

Open Exchange Rates Data Model

Open Exchange Rates 3rd Party OAuth

Featured Resources

What Are Feature Flags?

Feature flags are a software development tool that has the capability to control the visibility of any particular feature. ...

BlogHow Your Data Teams Can Do More With Marketing Analytics

Improve your marketing analytics with Matillion Data Productivity Cloud that enables businesses to centralize and integrate ...

BlogThe Importance of Data Classification in Cloud Security

Data classification enables the targeted protection and management of sensitive information. Personally Identifiable ...

Share: