- Blog

- 09.14.2018

- Data Fundamentals

Using the QuickBooks Query component in Matillion ETL for Snowflake

Matillion uses the Extract-Load-Transform (ELT) approach to delivering quick results for a wide range of data processing purposes: everything from customer behavior analytics, financial analysis, and even reducing the cost of synthesizing DNA.

The QuickBooks Query component in Matillion ETL for Snowflake presents an easy-to-use graphical interface, enabling you to easily pull data from QuickBooks Online directly into Snowflake. Many of our customers are using this to integrate their company’s accounting and payment data with data from other areas of their business.

The connector is completely self-contained: no additional software installation is required. It’s within the scope of an ordinary Matillion license, so there is no additional cost for using the features.

Data Extraction

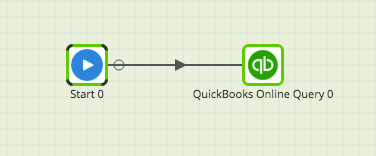

Start by creating an Orchestration job to extract the required data from QuickBooks Online. In the Components search bar type QuickBooks. Drag and drop the QuickBooks Online Query component onto the job canvas.

Authentication

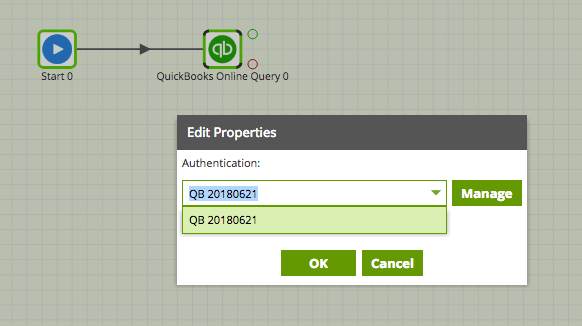

The QuickBooks Online Query supports OAuth authentication. The first step in configuring the Snowflake component is to provide the Authentication to QuickBooks Online. The Matillion QuickBooks Online Query component requires OAuth to be set up to authenticate Matillion to connect to QuickBooks Online data. Further details of configuring QuickBooks Online OAuth is available on our support center.

Clicking on the 3 dots next to the Authentication property will bring a pop-up box showing all available QuickBooks Online accounts:

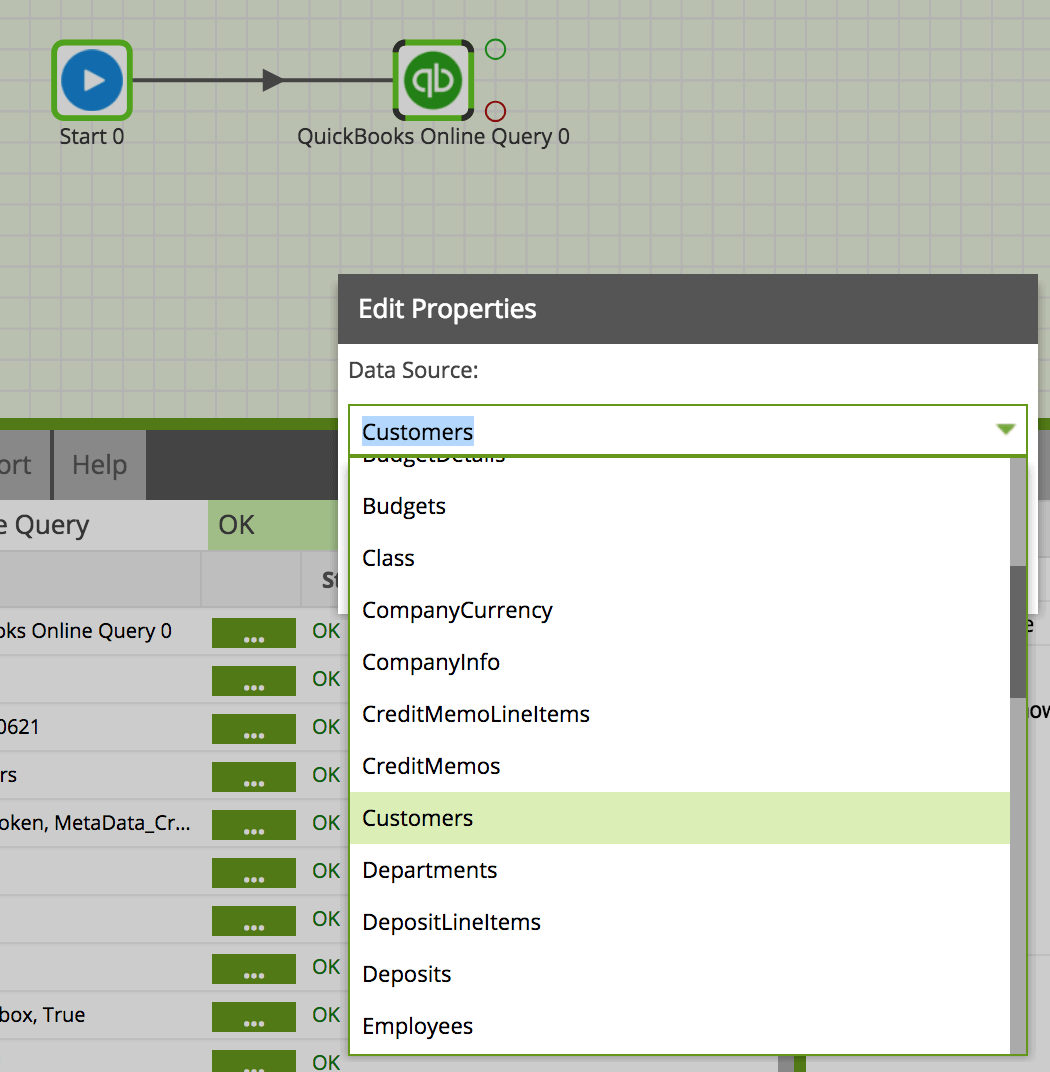

Data Source

Next, select the Data Source (table) you want to pull data from, displayed in the Data Source drop down.

Data Selection

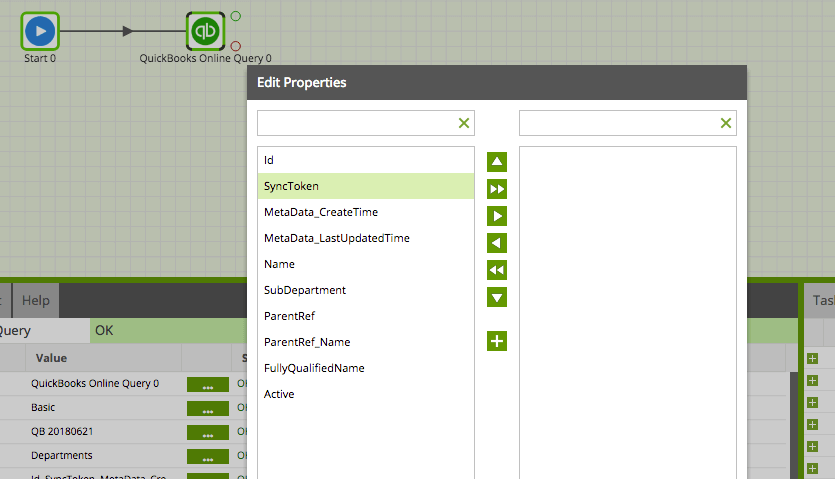

Select the columns from the data source that you are interested in. Matillion will supply a list of the columns available to you in the Data Source previously selected. This will form the new table which you will create in Snowflake with the help of Matillion.

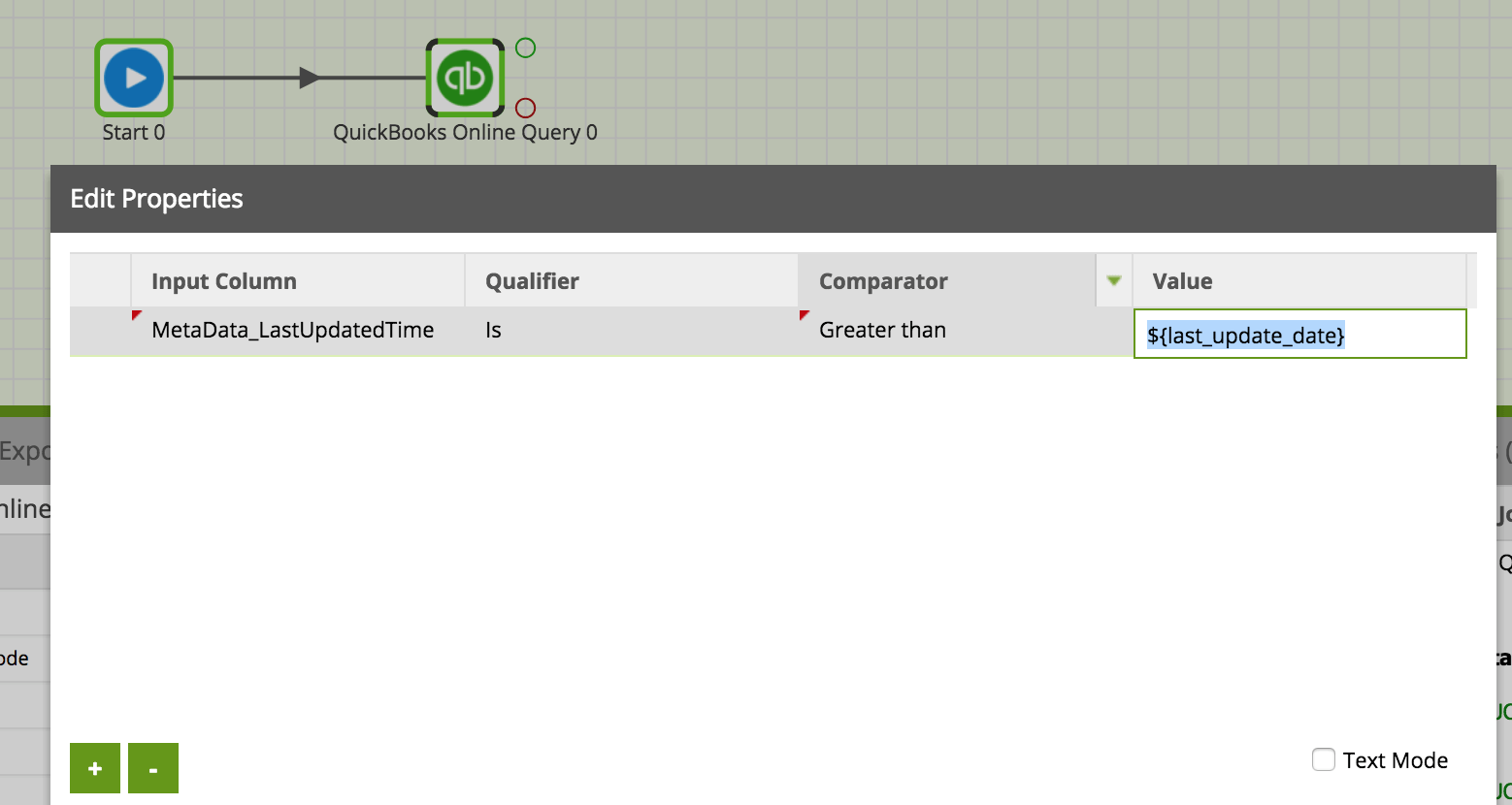

Data Source Filter

Leaving the data source filter empty will allow the query to return all rows (based on the setting in LIMIT). You may want to pull just the new records, for example, since the last time you pulled data in from this data source. If so, populate the data source filter accordingly. Here is an example of pulling just new rows based on a variable “last_update_date”:

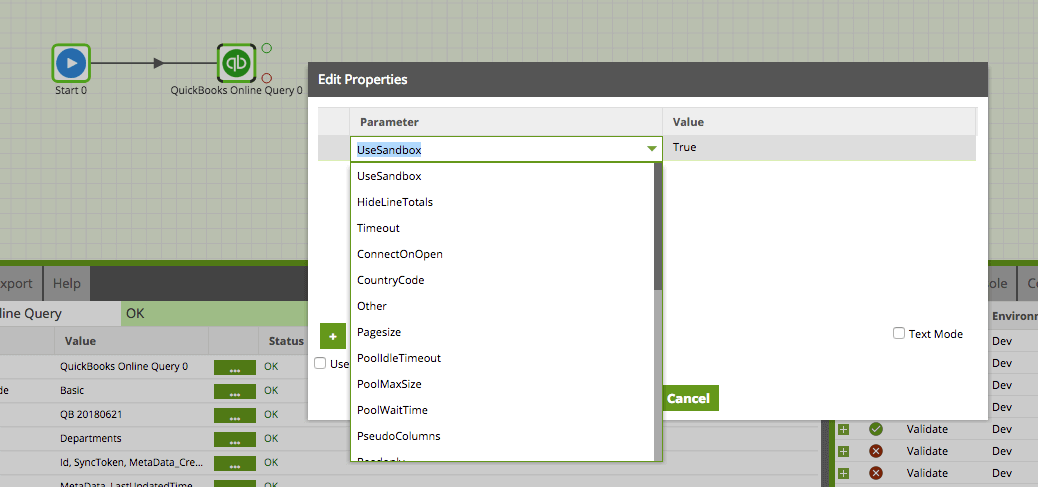

Connection Options

Several connection options are available for the QuickBooks Online Query. In particular, if you are connecting to a Sandbox of QuickBooks Online you’ll need to select ‘UseSandbox’ and set it to True since the default setting is False.

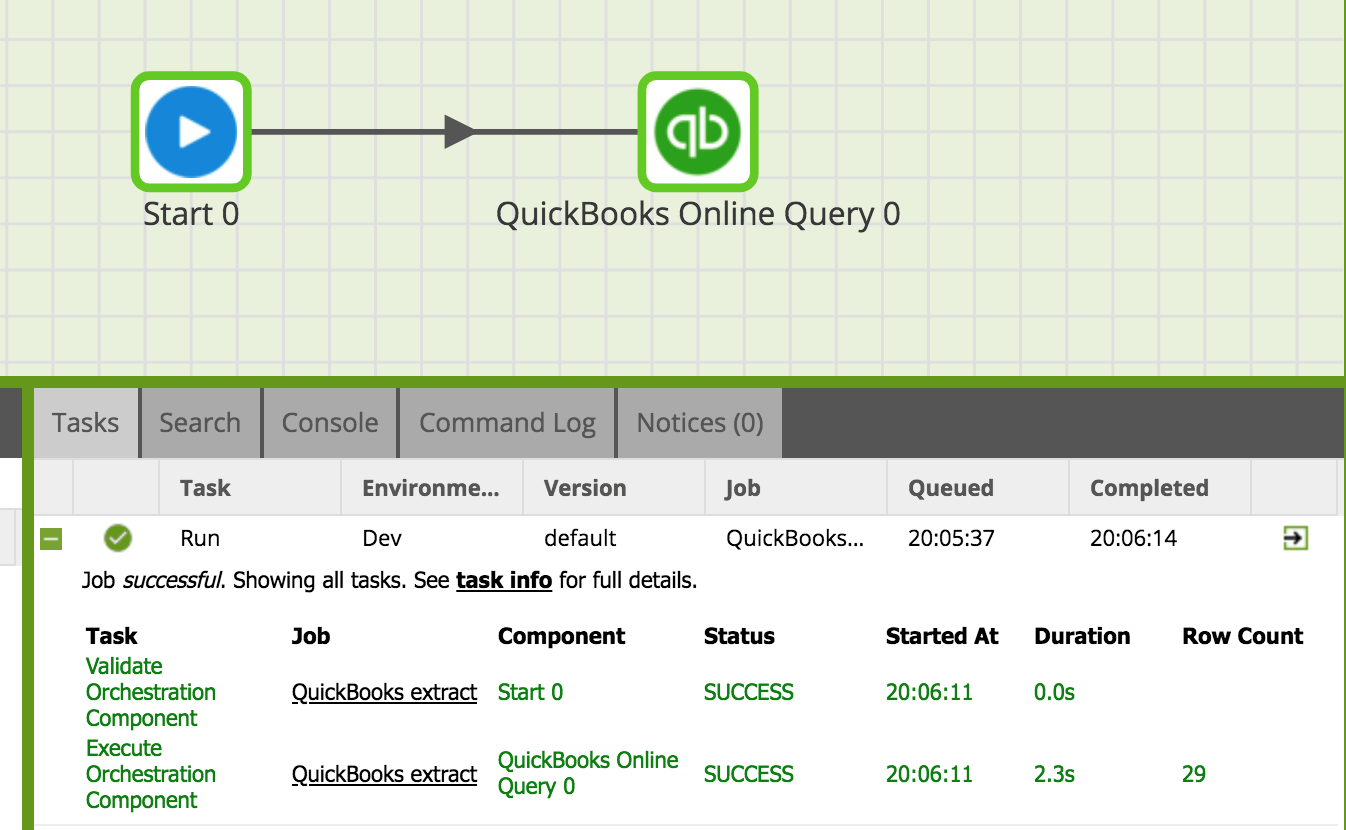

Running the QuickBooks Online Query component in Matillion ETL for Snowflake

Before you can run the component, you need to specify a Target Table name. This is the name of a new table that Matillion will create (or overwrite) to write the data into Snowflake. Also, an S3 Staging Area must be specified. This is an S3 bucket which is used to temporarily store the results of the query before it is loaded into Snowflake.

This component also has a Limit property which forces an upper limit on the number of records returned.

You can run the Orchestration job, either manually or by using the Scheduler, to query your data and bring it into Snowflake.

Transforming the Data/Transformation Job

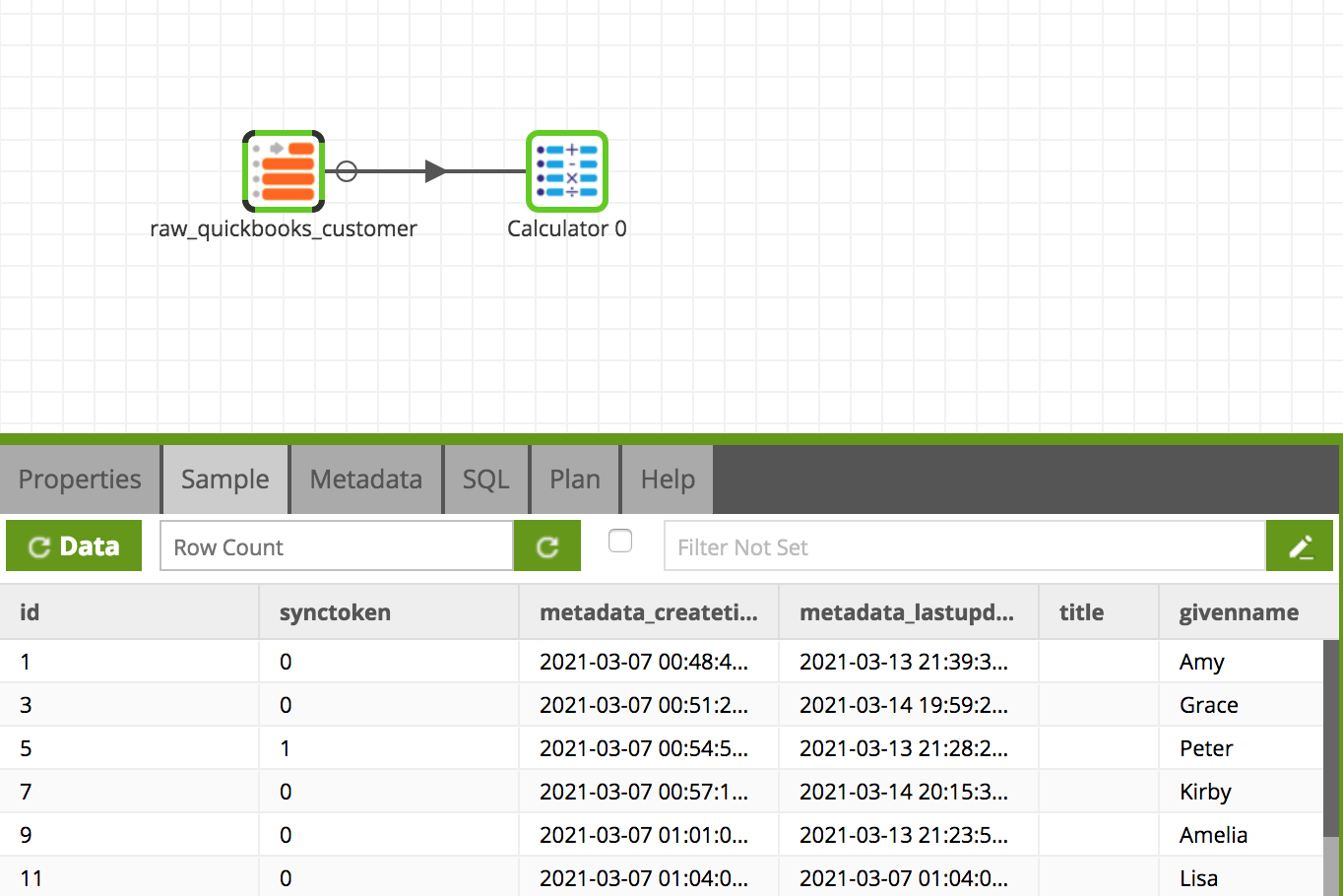

Once you have extracted your data from QuickBooks Online and loaded into a Snowflake table, you can start to transform the data.

In this way, you can build out the rest of your downstream transformations and analysis, taking advantage of Snowflake’s power and scalability.

Useful Links

QuickBooks Online Query Data Model

QuickBooks Online 3rd Party OAuth Setup

Begin your data journey

Try the Quickbooks Query component in Matillion ETL for Snowflake. Arrange a free demo, or start a free 14-day trial.

Featured Resources

What Are Feature Flags?

Feature flags are a software development tool that has the capability to control the visibility of any particular feature. ...

BlogHow Your Data Teams Can Do More With Marketing Analytics

Improve your marketing analytics with Matillion Data Productivity Cloud that enables businesses to centralize and integrate ...

BlogThe Importance of Data Classification in Cloud Security

Data classification enables the targeted protection and management of sensitive information. Personally Identifiable ...

Share: