- Blog

- 10.02.2017

- Data Fundamentals, Dev Dialogues

Using the Twitter Query Component in Matillion ETL for BigQuery

Matillion's "Twitter Query" component enables you to extract data from your Twitter account and load it into Google BigQuery.

The Twitter Query component in Matillion ETL for BigQuery uses the ETL principle to load data from diverse sources into one Google BigQuery dataset. From there, you can take advantage of BigQuery’s power and scalability to transform, join and aggregate the data into shape for reporting or analytics.

Matillion's "Twitter Query" component enables you to extract data from your Twitter account and load it into Google BigQuery.

The Twitter Query component in Matillion ETL for BigQuery uses the ETL principle to load data from diverse sources into one Google BigQuery dataset. From there, you can take advantage of BigQuery’s power and scalability to transform, join and aggregate the data into shape for reporting or analytics.

Video

Watch our tutorial video for a demonstration on how to set up and use the Twitter Query component in Matillion ETL for BigQuery. https://youtu.be/-qSn_SuCjKcData extraction architecture

Similar to most of Matillion’s Load/Unload components, acquiring data from Twitter is a two stage process. Stage 1

Matillion connects to Twitter’s API using OAuth credentials. Before using the Twitter Query component you’ll need to set up your OAuth credentials; this is a once-only setup task. Once authenticated, Matillion brings the data from Twitter across the network.

Stage 2

Matillion instructs BigQuery to bulk-load the extracted data. This is the fastest way to load the Twitter data into BigQuery from end to end.

Stage 1

Matillion connects to Twitter’s API using OAuth credentials. Before using the Twitter Query component you’ll need to set up your OAuth credentials; this is a once-only setup task. Once authenticated, Matillion brings the data from Twitter across the network.

Stage 2

Matillion instructs BigQuery to bulk-load the extracted data. This is the fastest way to load the Twitter data into BigQuery from end to end.

Data Orchestration

The Twitter Query is a data Orchestration component. To use the Twitter Query component you need to create an Orchestration job and edit it. Locate the Twitter Query in the component list and drag it onto the Orchestration job canvas to edit it. It’s always best to work down the property list from top to bottom. Some of the key setting are as follows:

It’s always best to work down the property list from top to bottom. Some of the key setting are as follows:

- Authentication - set this to the name of the OAuth credentials you intend to use

- Data Source - choose a table or view from those available in the Twitter data model. There are about 20 of the main elements in the Twitter data model, including Tweets, Direct Messages, Followers and Lists

- Data Selection - choose the columns, depending on which Data Source you’re using

- Data Source Filter - you should normally enter some filters to restrict the data of interest

- Target Table - choose a name for the new BigQuery table. Note this is a “staging” table, so you’ll need to move the data on after loading (see the next section

- Cloud Storage Staging Area - choose one of your existing buckets. It will be used temporarily during the bulk load (stage 2, as mentioned above)

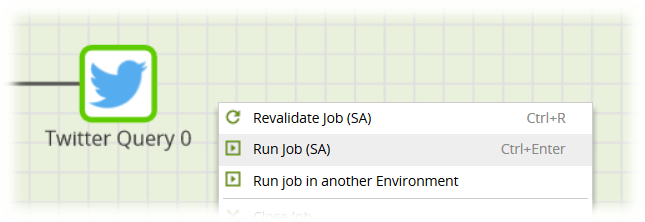

Running the Extract and Load

Matillion has a built-in scheduler, which you can use to run jobs automatically. During testing and development, however, you’ll probably just want to run it interactively with a right-click on the canvas. The actual run-time will vary according to the complexity of the query, how much data is returned, and whether Twitter apply rate limiting

Once it’s working, you can add this Orchestration job into your daily schedule and the new BigQuery table will be dropped and recreated every day, containing all the newly matching data.

The actual run-time will vary according to the complexity of the query, how much data is returned, and whether Twitter apply rate limiting

Once it’s working, you can add this Orchestration job into your daily schedule and the new BigQuery table will be dropped and recreated every day, containing all the newly matching data.

Staging and Transformations

The Target Table property you chose is the name of a BigQuery “staging” table. That means it will be recreated every time the Twitter Query component runs. If the table already exists, it will be silently dropped first. It’s deliberately designed this way so you can do incremental loads and take advantage of BigQuery’s fast bulk loader. However, it does mean that you need to copy the newly-loaded data into a more permanent location after every load. The usual pattern is to call a new Transformation job immediately after the Load. Data returned from an API will very likely not conform to the required schema. Transformations can now be introduced to cleanse the data: fixing datatypes and removing duplicates.

Matillion’s Data Quality Framework document is a detailed guide for this, but a typical example would be to avoid loading the same transactional data (tweets, for example) more than once.

Data returned from an API will very likely not conform to the required schema. Transformations can now be introduced to cleanse the data: fixing datatypes and removing duplicates.

Matillion’s Data Quality Framework document is a detailed guide for this, but a typical example would be to avoid loading the same transactional data (tweets, for example) more than once.

In the above example, the newly-loaded staging data (stg_tweets) is left-joined to the permanent table (master_tweets) by the unique ID of every record. This join returns the same records from stg_tweets, with one extra ID column from the master_tweets table.

Records where the master ID is null are currently missing from the master table and can be inserted. The Filter performs this check using an “Is Null or blank” clause, and it has the effect of an antijoin. The final Table Output component is then safe to run, with its write disposition set to Append, and will not add duplicates.

Having cleansed the data in this way, it’s then ready to be blended in with data from other sources.

In the above example, the newly-loaded staging data (stg_tweets) is left-joined to the permanent table (master_tweets) by the unique ID of every record. This join returns the same records from stg_tweets, with one extra ID column from the master_tweets table.

Records where the master ID is null are currently missing from the master table and can be inserted. The Filter performs this check using an “Is Null or blank” clause, and it has the effect of an antijoin. The final Table Output component is then safe to run, with its write disposition set to Append, and will not add duplicates.

Having cleansed the data in this way, it’s then ready to be blended in with data from other sources.

Useful Links

Twitter Query Component in Matillion ETL for BigQuery Component Data Model OAuth Set Up Integration information VideoBegin your data journey

Want to try the Twitter Query component in Matillion ETL for BigQuery? Request a free demo now, or launch on the Google Cloud Launcher.

Ian Funnell

Data Alchemist

Ian Funnell, Data Alchemist at Matillion, curates The Data Geek weekly newsletter and manages the Matillion Exchange.

Featured Resources

Blog

10 Cost Optimization Tips for Snowflake

How to save costs using Snowflake.

Learn more BlogSchema Drift and Dynamic S3 Files

This article is part 2 of a three-part series on schema drift ...

Learn more BlogBest Practices For Connecting Matillion ETL To Azure Blob Storage

Matillion makes data work more productive by empowering the entire ...

Learn more

Share: